Model Governance & Risk Management: Keeping Your AI Models Under Control

When you’re shepherding a portfolio of production-grade AI models, you live in a paradox: the very engines that drive innovation can just as easily undermine it. Each new model unlocks sharper forecasts or leaner workflows, yet every deployment quietly widens your exposure—accuracy can drift, bias can creep in, and compliance gaps can open overnight. That’s why rigorous model governance and risk management aren’t optional add-ons; they’re the guardrails that let you scale AI with confidence instead of crossing your fingers.

AI Model Governance Key Takeaways

- Hidden risks — unchecked drift, bias, and compliance gaps quietly chip away at an AI model’s value.

- Model sprawl — when dozens of unsupervised models pop up, inconsistency surges and risk multiplies.

- Robust frameworks — disciplined version control, MLOps pipelines, and automated drift-and-bias monitoring keep models dependable.

- Real-world lessons — the teams that govern proactively avoid costly missteps and reputational hits.

- Proactive management — treat AI with this rigor and it shifts from a potential liability to a durable strategic asset.

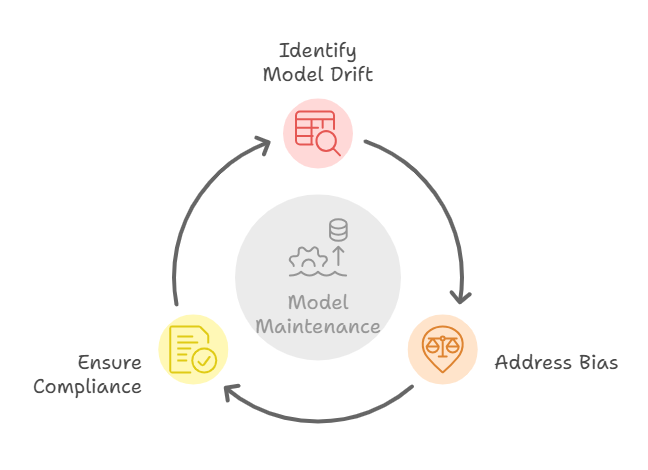

Why Robust AI Model Governance Catches Drift and Bias Early

Every time you ship a model, you’re essentially betting that yesterday’s patterns will still explain tomorrow’s reality. But tomorrow rarely cooperates:

- Model drift: Consumer habits shift, markets morph, and new data feeds arrive. A model that once posted 95% accuracy can sink to coin-flip territory if you aren’t watching.

- Bias: Train on lopsided history—say, old hiring data—and you may silently favor the same over-represented groups, opening the door to ethical backlash and lawsuits.

- Compliance gaps: Privacy rules, transparency mandates, and consumer-rights laws keep evolving. If your governance can’t show how a model reaches its decisions, you’re facing fines, rollbacks, or both.
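One common way to put a number on drift is the Population Stability Index (PSI), which compares how a feature or model score was distributed at training time with how it’s distributed in production. Here’s a minimal, standard-library-only sketch, assuming both samples have already been bucketed into the same bins. The 0.1 and 0.25 cut-offs are a widely used rule of thumb rather than a formal standard, and the function names and sample counts are illustrative.

```python
import math
from typing import Sequence


def psi(expected_counts: Sequence[int], actual_counts: Sequence[int], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both inputs are per-bin counts over the SAME bin edges: `expected_counts`
    from the training (reference) window, `actual_counts` from production.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    exp_total = sum(expected_counts)
    act_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Convert counts to proportions; eps keeps an empty bin from hitting log(0).
        e_pct = max(e / exp_total, eps)
        a_pct = max(a / act_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score


def drift_status(score: float) -> str:
    # Rule-of-thumb bands (an assumption, not a standard): <0.1 stable, 0.1-0.25 watch, >=0.25 drifted.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "watch"
    return "drifted"


# Model-score counts over five fixed bins (illustrative numbers).
train = [100, 200, 400, 200, 100]
prod = [400, 200, 200, 100, 100]
score = psi(train, prod)
print(f"PSI = {score:.3f} -> {drift_status(score)}")  # PSI = 0.624 -> drifted
```

Because PSI only needs inputs or predictions, not ground-truth labels, it can raise a flag well before accuracy numbers catch up.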

In short, the bet only pays off when you have guardrails that catch drift, surface bias, and prove compliance before regulators or customers do. These problems will surface; it’s only a matter of time. A disciplined governance stack flags them early and neutralizes them long before they become front-page scandals or budget-busting fines.

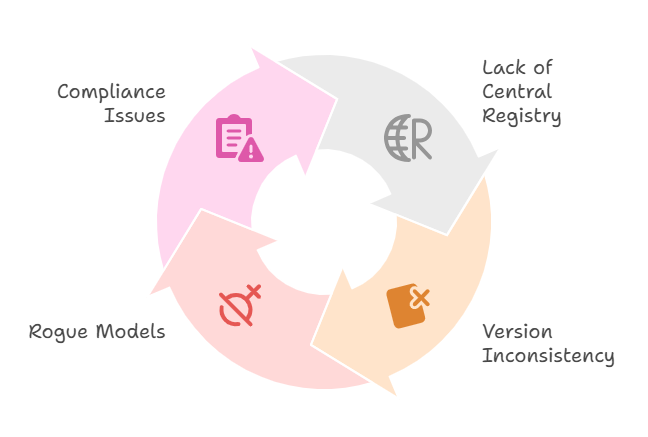

The Dangers of Uncontrolled Model Sprawl

Left unchecked, experimentation becomes model sprawl. Marketing launches a churn predictor, finance prototypes a credit-risk scorer, operations codes an inventory forecaster—and suddenly you’re wrangling a zoo of algorithms, each with its own data pipeline, drift profile, and version history. Without a unified governance playbook, that tangle turns invisible risk into inevitable cost.

Model Sprawl = Blind Spots

- Invisible lineage: No central registry means you can’t tell who last touched a model, what data fed it, or whether that version is still in prod.

- Version drift: One team might rely on a year-old model while another enjoys a freshly retrained upgrade—creating conflicting decisions and eroding trust.

- Rogue models: Abandoned predictors keep shaping prices, credit limits, or forecasts with zero monitoring, running on logic that grows more outdated as the data moves on.

If you can’t rattle off which models are live, who owns them, and how they’re performing, you’re operating on faith—not facts. The result is inconsistent decisions, shaky forecasts, and a leadership team that starts questioning the entire AI program. In short, uncontrolled sprawl doesn’t just clutter your architecture; it drains confidence and invites operational and reputational fallout.
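A central inventory is what turns faith back into facts. The sketch below is a minimal, in-memory stand-in for a real model registry; the `ModelRecord` fields, the 30-day evaluation window, and the `audit` function are hypothetical choices for illustration, not any particular platform’s schema. It surfaces two of the blind spots above: live models with no owner, and live models nobody has evaluated recently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ModelRecord:
    name: str
    version: str
    owner: Optional[str]                 # None = nobody is accountable
    live: bool                           # is it serving decisions right now?
    last_evaluated: Optional[datetime]   # None = never evaluated since deployment
    last_accuracy: Optional[float]       # most recent measured performance, if any


def audit(registry: list[ModelRecord], now: datetime,
          max_age: timedelta = timedelta(days=30)) -> dict[str, list[str]]:
    """Group live models by governance gap."""
    findings: dict[str, list[str]] = {"unowned": [], "unmonitored": []}
    for record in registry:
        if not record.live:
            continue  # retired models are out of scope for this check
        label = f"{record.name}@{record.version}"
        if record.owner is None:
            findings["unowned"].append(label)
        if record.last_evaluated is None or now - record.last_evaluated > max_age:
            findings["unmonitored"].append(label)
    return findings


now = datetime(2024, 6, 1)
registry = [
    ModelRecord("churn-predictor", "v3", "marketing", True, datetime(2024, 5, 28), 0.91),
    ModelRecord("credit-risk-scorer", "v1", None, True, datetime(2024, 5, 30), 0.88),
    ModelRecord("inventory-forecaster", "v7", "operations", True, None, None),
]
print(audit(registry, now))
# {'unowned': ['credit-risk-scorer@v1'], 'unmonitored': ['inventory-forecaster@v7']}
```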

AI Model Governance Frameworks & Tools

Reining in model sprawl—and the risks it drags along—takes a purpose-built mix of AI-ready processes and platforms:

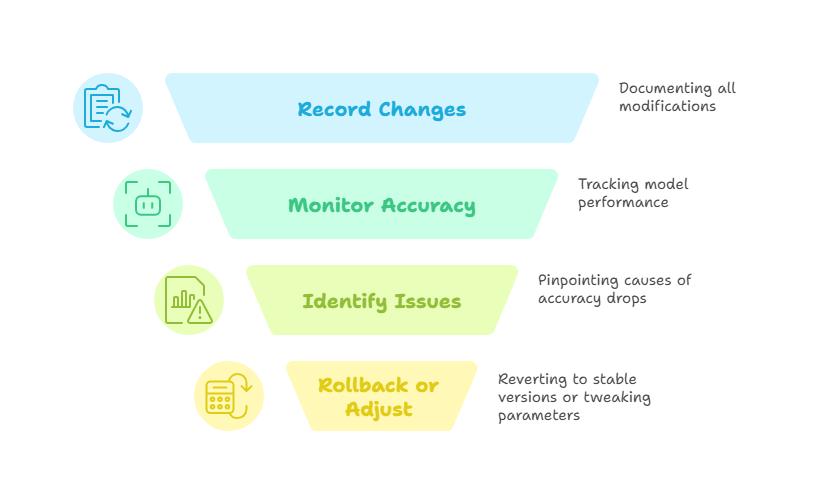

Model Versioning

Log every tweak—capture the dataset snapshot, the hyper-parameters you tuned, and the precise deployment timestamp. If accuracy falls off a cliff, those version tags let you roll back in seconds or zero in on the exact change that caused the slip.
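Here’s a minimal sketch of what logging every tweak can look like, assuming a simple in-memory log stands in for a real registry. The dataset snapshot is identified by a SHA-256 fingerprint of its bytes, so any change to the training data yields a different hash. `ModelVersion`, `VersionLog`, and `diff_versions` are hypothetical names, and the hyperparameters are made up.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    tag: str
    dataset_sha256: str      # fingerprint of the exact training snapshot
    hyperparameters: dict
    deployed_at: datetime    # UTC timestamp recorded at registration


class VersionLog:
    """Append-only version history plus a pointer to the version now serving."""

    def __init__(self) -> None:
        self.history: list[ModelVersion] = []
        self.active = -1

    def register(self, tag: str, dataset: bytes, hyperparameters: dict) -> ModelVersion:
        version = ModelVersion(
            tag=tag,
            dataset_sha256=hashlib.sha256(dataset).hexdigest(),
            hyperparameters=dict(hyperparameters),  # copy so later edits can't rewrite history
            deployed_at=datetime.now(timezone.utc),
        )
        self.history.append(version)
        self.active = len(self.history) - 1
        return version

    def rollback(self) -> ModelVersion:
        """Serve the previous version again; nothing is deleted from history."""
        if self.active <= 0:
            raise RuntimeError("no earlier version to roll back to")
        self.active -= 1
        return self.history[self.active]


def diff_versions(old: ModelVersion, new: ModelVersion) -> dict:
    """List what changed between two versions to help pinpoint a regression."""
    changes = {}
    if old.dataset_sha256 != new.dataset_sha256:
        changes["dataset"] = (old.dataset_sha256[:12], new.dataset_sha256[:12])
    for key in sorted(old.hyperparameters.keys() | new.hyperparameters.keys()):
        before, after = old.hyperparameters.get(key), new.hyperparameters.get(key)
        if before != after:
            changes[key] = (before, after)
    return changes


log = VersionLog()
v1 = log.register("churn-v1", b"<bytes of January snapshot>", {"max_depth": 6, "learning_rate": 0.1})
v2 = log.register("churn-v2", b"<bytes of February snapshot>", {"max_depth": 8, "learning_rate": 0.1})
print(diff_versions(v1, v2))   # dataset hash and max_depth changed
print(log.rollback().tag)      # churn-v1
```

Rolling back only moves a pointer; the full history stays intact, which keeps the audit trail honest.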

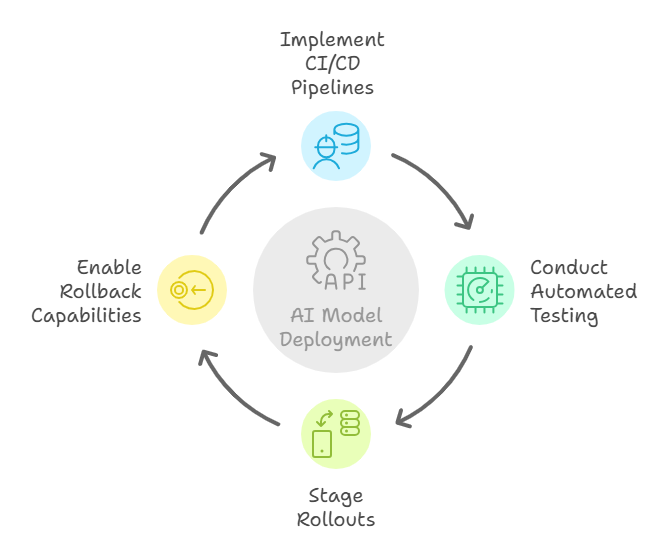

MLOps Platforms

Treat each AI model the same way you treat production code: run it through a purpose-built CI/CD pipeline for machine learning. Automated unit and integration tests vet the data and logic, staged rollouts limit the blast radius if a flaw slips through, and one-click rollbacks ensure that any regression or outage is short-lived.
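A CI/CD pipeline for models is, at its core, a set of automated gates plus a controlled way to shift traffic. The sketch below assumes the candidate and the current production model were scored on the same held-out set; it blocks promotion unless every check passes, and uses a deterministic hash split to send a small, stable slice of traffic to the candidate during a staged rollout. The 90% floor, the one-point regression tolerance, the 5% canary share, and all names are illustrative assumptions.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class EvalResult:
    accuracy: float
    schema_ok: bool  # did the evaluation data match the expected columns and types?


def promotion_gate(candidate: EvalResult, production: EvalResult,
                   min_accuracy: float = 0.90, max_regression: float = 0.01) -> list[str]:
    """Return every failed check; an empty list means the candidate may be staged."""
    failures = []
    if not candidate.schema_ok:
        failures.append("schema check failed")
    if candidate.accuracy < min_accuracy:
        failures.append(f"accuracy {candidate.accuracy:.3f} is below the {min_accuracy:.2f} floor")
    if candidate.accuracy < production.accuracy - max_regression:
        failures.append("candidate regresses against production")
    return failures


def routes_to_candidate(request_id: str, canary_share: float = 0.05) -> bool:
    """Deterministically send a fixed slice of traffic to the candidate.

    The same ID always hashes to the same bucket, so each caller sticks to one
    model; setting canary_share to 0 routes everything back to production.
    """
    bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 10_000
    return bucket < canary_share * 10_000


production = EvalResult(accuracy=0.93, schema_ok=True)
candidate = EvalResult(accuracy=0.89, schema_ok=True)
print(promotion_gate(candidate, production))  # two failures: below the floor, and a regression

share = sum(routes_to_candidate(f"user-{i}") for i in range(10_000)) / 10_000
print(f"observed canary share: {share:.3f}")  # close to 0.05
```

Hashing the ID instead of sampling randomly keeps each user on one model for the whole rollout, which makes before-and-after comparisons cleaner.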

Drift & Bias Detection

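Put monitoring on autopilot: track core performance signals (accuracy, precision, recall) right alongside fairness metrics such as demographic parity. Because ground-truth labels often arrive days or weeks after a prediction, watch the distributions of inputs and predictions too: those shifts are visible right away, while accuracy can only be measured once labels come in. When a model drifts or starts quietly favoring one group, automated alerts surface the issue so you can intervene before it impacts users or compliance.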
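To make side-by-side tracking concrete, here’s a minimal, standard-library-only sketch for a binary classifier. Demographic parity difference is computed as the gap between the highest and lowest positive-prediction rates across groups (0.0 means parity). The alert thresholds are illustrative assumptions, not regulatory values, and the function names are hypothetical.

```python
from collections import defaultdict


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return {
        "accuracy": correct / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def demographic_parity_difference(y_pred, groups):
    """Max minus min positive-prediction rate across groups (0.0 = perfect parity)."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for p, g in zip(y_pred, groups):
        totals[g] += 1
        positives[g] += p
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def monitor(y_true, y_pred, groups, min_accuracy=0.90, max_dp_gap=0.10):
    """Return alert messages; in this sketch only accuracy and the parity gap trigger alerts."""
    alerts = []
    m = classification_metrics(y_true, y_pred)
    if m["accuracy"] < min_accuracy:
        alerts.append(f"accuracy {m['accuracy']:.2f} < {min_accuracy}")
    gap = demographic_parity_difference(y_pred, groups)
    if gap > max_dp_gap:
        alerts.append(f"demographic parity gap {gap:.2f} > {max_dp_gap}")
    return alerts


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(classification_metrics(y_true, y_pred))  # {'accuracy': 0.875, 'precision': 1.0, 'recall': 0.75}
for alert in monitor(y_true, y_pred, groups):
    print("ALERT:", alert)
```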

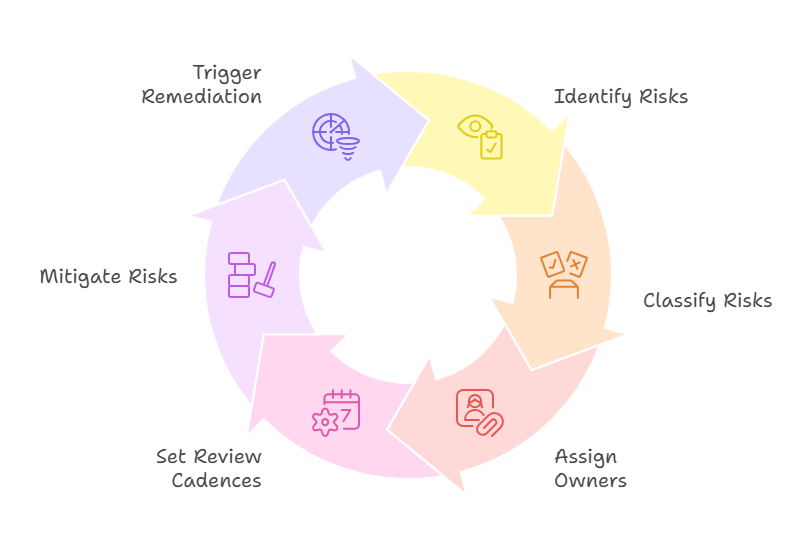

AI Model Risk Management Framework

Put a structured risk-management playbook in place: formally identify, label, and rank every legal, reputational, operational, and ethical risk tied to each model. Give each risk an owner, schedule regular reviews, and link clear action triggers to hard metrics, for instance: “If accuracy dips below 90%, automatically launch a retraining workflow.”
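A risk register becomes actionable once every entry carries an owner, a review date, and a trigger a machine can check. The sketch below encodes the example rule above as data; `RiskEntry`, the metric names, the severity scale, and the action strings are all hypothetical, and in a real pipeline the action would call your retraining workflow instead of returning a message.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class RiskEntry:
    model: str
    category: str                      # legal, reputational, operational, or ethical
    severity: int                      # 1 (low) to 5 (critical); used for ranking
    owner: str                         # the person or team accountable
    next_review: date
    trigger: Callable[[dict], bool]    # evaluated against the model's latest metrics
    action: str


def evaluate(register: list[RiskEntry], metrics: dict[str, dict], today: date) -> list[str]:
    """Fire triggers and flag overdue reviews, highest-severity risks first."""
    actions = []
    for risk in sorted(register, key=lambda r: r.severity, reverse=True):
        if risk.trigger(metrics.get(risk.model, {})):
            actions.append(f"[{risk.owner}] {risk.model}: {risk.action}")
        if today > risk.next_review:
            actions.append(f"[{risk.owner}] {risk.model}: review overdue ({risk.category})")
    return actions


register = [
    RiskEntry("credit-risk-scorer", "operational", 4, "risk-team", date(2024, 7, 1),
              # A missing metric defaults to 1.0, so this rule does not fire on absent data.
              lambda m: m.get("accuracy", 1.0) < 0.90, "launch retraining workflow"),
    RiskEntry("credit-risk-scorer", "ethical", 5, "fairness-lead", date(2024, 5, 1),
              lambda m: m.get("dp_gap", 0.0) > 0.10, "open bias investigation"),
]
latest = {"credit-risk-scorer": {"accuracy": 0.87, "dp_gap": 0.04}}
actions = evaluate(register, latest, today=date(2024, 6, 1))
for line in actions:
    print(line)
```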

When you stack these safeguards, you create a clear, auditable AI lifecycle: models evolve in lockstep with the business, drift is flagged early, and every stakeholder can treat AI as a compounding asset—never a hidden liability.

AI Model Governance Case Studies

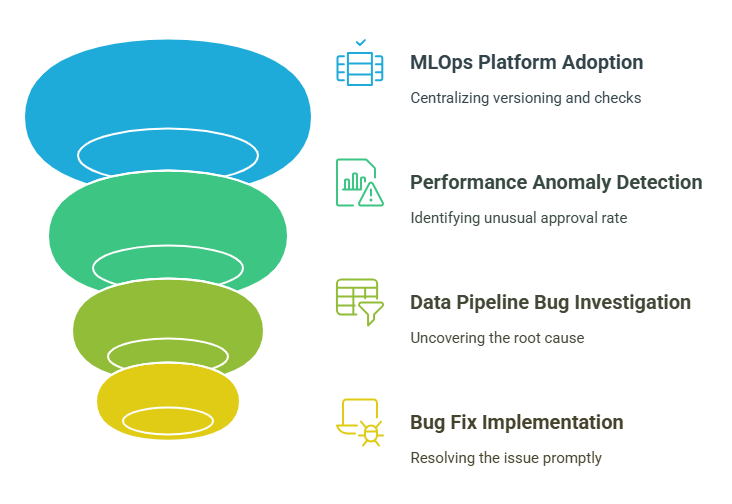

Financial Services Firm

Picture a bank juggling three credit-scoring models, each built by a different analytics team. Once they introduced an MLOps platform to centralize versioning and automate performance checks, they spotted—within days—an odd spike in one model’s approval rate. A quick dive traced the jump to a data-pipeline bug. Because their new governance controls surfaced the anomaly early, they fixed the glitch before a single risky loan slipped through, saving the bank millions.
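The automated performance check in this scenario could be as simple as comparing each day’s approval rate with a trailing baseline. A minimal sketch, assuming daily approval rates are already logged: it flags any day more than three standard deviations from the previous 14 days. The window, the threshold, `flag_spikes`, and the sample rates are illustrative, not figures from the case.

```python
from statistics import mean, stdev


def flag_spikes(daily_rates: list[float], window: int = 14, z_threshold: float = 3.0) -> list[int]:
    """Return indices of days whose approval rate is anomalous vs. the trailing window."""
    flagged = []
    for i in range(window, len(daily_rates)):
        history = daily_rates[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if daily_rates[i] != mu:
                flagged.append(i)
            continue
        if abs(daily_rates[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


rates = [0.42, 0.41, 0.43, 0.42, 0.40, 0.41, 0.42, 0.43, 0.41, 0.42, 0.42, 0.41, 0.43, 0.42,
         0.61,  # hypothetical day the pipeline bug hits
         0.42]
print(flag_spikes(rates))  # [14]
```

One design choice worth noting: a flagged day stays in the trailing window here, which widens the baseline for the next two weeks; a production check might exclude confirmed anomalies.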

E-Commerce Retailer

An online marketplace relied on an AI recommender until complaints poured in: the engine kept pushing the same best-sellers and burying smaller brands. After wiring in bias-detection metrics, the team saw diversity scores plunge below their own threshold. One targeted retraining pass, this time on a balanced catalog, put variety back in the feed and lifted click-through rates by 12%.
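The case doesn’t say how the team defined its diversity score, so treat this as one plausible definition rather than theirs: the normalized Shannon entropy of brand exposure across recommendation slots, where 1.0 means every catalog brand appears equally often and values near 0.0 mean a handful of brands dominate. The 0.6 threshold, `exposure_diversity`, and the brand names are made up for illustration.

```python
import math
from collections import Counter


def exposure_diversity(recommended_brands: list[str], catalog_brands: int) -> float:
    """Normalized entropy of brand exposure: 0.0 = one brand only, 1.0 = all catalog brands shown equally."""
    if catalog_brands < 2 or not recommended_brands:
        return 0.0
    total = len(recommended_brands)
    probs = [count / total for count in Counter(recommended_brands).values()]
    entropy = sum(p * math.log(1 / p) for p in probs)
    # Divide by the largest entropy achievable: every catalog brand shown equally often.
    return entropy / math.log(catalog_brands)


DIVERSITY_THRESHOLD = 0.6  # hypothetical team threshold

skewed = ["BigCo"] * 18 + ["MegaBrand"] * 2                       # 20 slots, two brands
balanced = ["BigCo", "MegaBrand", "SmallShop", "IndieLabel"] * 5  # 20 slots, four brands
for name, feed in [("skewed", skewed), ("balanced", balanced)]:
    score = exposure_diversity(feed, catalog_brands=4)
    status = "ALERT" if score < DIVERSITY_THRESHOLD else "ok"
    print(f"{name}: {score:.2f} {status}")
# skewed: 0.23 ALERT
# balanced: 1.00 ok
```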

Bottom line: solid governance doesn’t just avert disasters—it also surfaces chances to tune your models and drive even better business results.

Conclusion & Key Insight

Model governance and risk management aren’t red tape—they’re what let AI scale safely and stay valuable. Put version control, MLOps pipelines, always-on drift and bias detection, and a formal risk playbook in place, and model sprawl turns into a coordinated, transparent ecosystem. The guiding principle is simple: manage your AI portfolio with the same discipline you apply to mission-critical software. Do that, and you deliver steady performance, stay ahead of regulators, and gain the confidence to roll out AI across the enterprise.

What to Do Next

Download the Model Governance Checklist

Kick off your audit with a free, step-by-step guide covering versioning, monitoring, and risk assessment.

Schedule a Model Governance Demo

See how a purpose-built MLOps platform can automate your governance, catch drift early, and enforce compliance.

Share & Subscribe

Found this post helpful? Share it with your team, subscribe for more AI best practices, and keep your projects on the cutting edge—safely.

Ready to take control of model drift, bias, and compliance?

Download our Model Governance Checklist now and book a free demo to see how an enterprise MLOps platform automates versioning, monitoring, and reporting, so your AI stays reliable and audit-ready!