How Engineering Teams Should Use AI – Without Over-Relying on It

AI can substantially shorten development cycles for engineering teams, but unchecked automation puts quality and creativity at risk.

Introduction

Artificial Intelligence (AI) has become an invaluable asset for engineering teams across various industries. From speeding up mundane tasks to delivering real-time predictive insights, AI-driven tools can significantly boost efficiency and innovation. However, over-reliance on AI can introduce new risks, reduce critical human oversight, and limit creative problem-solving.

In this article, we’ll explore the key considerations engineering leaders must address to ensure that AI remains a powerful ally, rather than a crutch, for their teams. We’ll delve into best practices for strategic AI adoption, discuss how to maintain essential human input, and outline the risks of unchecked automation.

Key Takeaways

- Identify core AI use cases that align with organizational goals and engineering strengths.

- Retain human oversight to mitigate errors, biases, and over-automation risks.

- Invest in AI ethics and data governance to maintain trust and comply with regulations.

- Continuously update and retrain AI models to adapt to changing data and new challenges.

Balancing Efficiency & Oversight in AI in Engineering Teams

Not every engineering task yields the same return on AI investment. Start by mapping your team’s workflow and pinpointing areas that are:

- Repetitive: Tasks like boilerplate code generation, bulk test-case creation, or routine build-and-deploy pipelines that consume engineering time but add limited unique value.

- Data-Intensive: Operations involving large logs, performance metrics, or telemetry streams—perfect for anomaly detection, predictive maintenance, or usage forecasting with minimal manual effort.

- Error-Prone: Activities such as code reviews, security scans, or compliance checks, where human mistakes can slip through unnoticed and introduce risk.

Validate Candidates Against Goals & Constraints

- Impact vs. Effort: Prioritize pilots that promise high time savings or quality improvements with minimal integration overhead.

- Data Availability: Ensure you have clean, well-labeled datasets for training or fine-tuning—AI thrives on structured inputs.

- Scalability: Choose use cases that scale across teams or projects, amplifying your ROI as adoption grows.
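One way to make these three criteria comparable is a simple scoring pass over the candidate list. The sketch below is only illustrative: the `UseCase` fields, the 1 to 5 scales, the scoring formula, and the sample candidates are all assumptions, not a standard method, and should be adapted to your own weighting.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int         # 1 (low) to 5 (high): expected time savings or quality gain
    effort: int         # 1 (low) to 5 (high): integration overhead
    data_ready: bool    # clean, well-labeled data is available
    teams_reached: int  # number of teams that could reuse the solution


def priority_score(uc: UseCase) -> float:
    """Impact-to-effort ratio scaled by reach; zero when data is not ready."""
    if not uc.data_ready:
        return 0.0
    return uc.impact / uc.effort * uc.teams_reached


# Hypothetical candidates, for illustration only.
candidates = [
    UseCase("automated code review", impact=4, effort=2, data_ready=True, teams_reached=5),
    UseCase("log anomaly detection", impact=5, effort=4, data_ready=True, teams_reached=2),
    UseCase("usage forecasting", impact=3, effort=3, data_ready=False, teams_reached=3),
]

ranked = sorted(candidates, key=priority_score, reverse=True)
for uc in ranked:
    print(f"{uc.name}: {priority_score(uc):.2f}")
```

Treating missing data as a hard zero is a deliberate choice in this sketch: a use case without usable data stays off the pilot list until that gap is closed.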

Examples in Practice

- Automated Code Reviews: Tools that scan pull requests for security vulnerabilities, style inconsistencies, or performance anti-patterns—freeing senior engineers to focus on architecture.

- Test Generation & Coverage: AI-assisted unit and integration test creation that boosts coverage and reduces regression risk.

- Log Analysis & Alerting: Real-time pattern detection in server logs to surface anomalies before they impact users.
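To make the log analysis example concrete, here is a minimal sketch of anomaly detection on per-minute error counts using a trailing-window z-score. It stands in for whatever detector a real tool would use; the function name, window size, threshold, and sample data are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(counts, window=5, threshold=3.0):
    """Return indexes whose value deviates from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: flag any change at all.
            if counts[i] != mu:
                anomalies.append(i)
            continue
        if abs(counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical error counts per minute parsed from server logs.
errors_per_minute = [4, 5, 3, 4, 6, 5, 4, 40, 5, 4]
spikes = find_anomalies(errors_per_minute)
print(spikes)
```

In this sample only the spike of 40 is flagged. A human should still confirm what the flagged minute means before anyone is paged or a rollback is triggered.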

By systematically selecting and validating AI use cases, engineering teams can unlock maximum value—boosting productivity, reducing risk, and freeing human experts to tackle the most creative and strategic challenges.

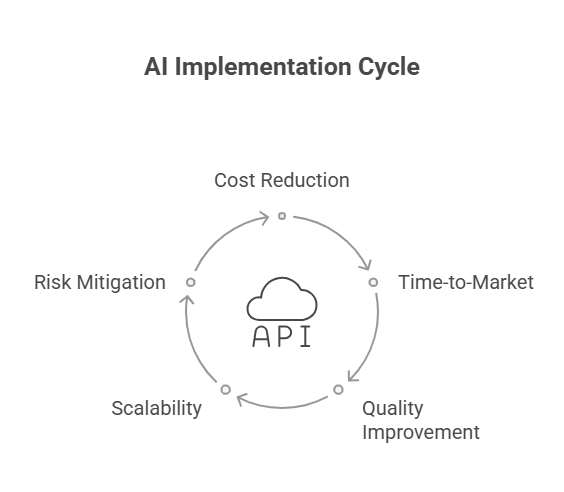

Aligning with Business Objectives

To maximize ROI, every AI initiative should map directly to your organization’s strategic goals. Before launching a pilot, ask:

- Cost Reduction: Will automating this task reduce staffing costs or eliminate expensive manual processes?

- Time-to-Market: Can AI-driven acceleration shave weeks or months off feature delivery cycles?

- Quality Improvement: Does this AI use case catch more defects, reduce rework, or elevate user satisfaction?

- Scalability: Will the solution grow seamlessly as your user base, codebase, or data volume expands?

- Risk Mitigation: Does it enhance security, compliance, or operational resilience?

Steps to Ensure Alignment

- Define Success Metrics: Establish KPIs—like % reduction in bug backlogs, cycle-time savings, or defect escape rate—to measure AI impact objectively.

- Stakeholder Buy-In: Involve product managers, finance, and operations early to validate use case priorities and secure cross-functional support.

- Pilot & Iterate: Run a small-scale proof-of-concept against real data, review outcomes against KPIs, then refine before broader rollout.

- Governance Checkpoints: Schedule decision gates—post-pilot and quarterly reviews—to ensure continued alignment as business goals evolve.
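The KPIs named above reduce to simple arithmetic once the inputs are agreed on. The sketch below assumes one common definition of defect escape rate (defects found in production divided by all defects found); the function names and the pilot figures are hypothetical.

```python
def defect_escape_rate(found_in_production: int, found_before_release: int) -> float:
    """Share of all known defects that reached production."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


def percent_reduction(baseline: float, current: float) -> float:
    """Percentage by which `current` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100


# Hypothetical figures from before and after a pilot.
before = defect_escape_rate(found_in_production=12, found_before_release=88)
after = defect_escape_rate(found_in_production=6, found_before_release=94)
cycle_time_saving = percent_reduction(baseline=10.0, current=8.0)  # days per feature

print(f"escape rate: {before:.2f} -> {after:.2f}")
print(f"cycle-time saving: {cycle_time_saving:.1f}%")
```

Fixing these definitions before the pilot starts keeps the post-pilot review from turning into a debate about how the numbers were computed.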

By rigorously tying AI projects to measurable business outcomes, engineering teams can justify investments, demonstrate clear value, and continuously optimize their AI roadmap.

Maintaining Human Oversight

Even the most advanced AI systems have limitations. Some outputs may contain subtle errors that only an experienced engineer can detect, while other models risk perpetuating biases present in the training data. Human oversight remains essential for:

- Validating AI outputs: Ensuring final decisions and designs truly meet quality and safety standards.

- Providing contextual judgment: Certain engineering challenges require creative, out-of-the-box thinking that AI alone may not provide.

- Identifying bias: Experienced professionals can better spot biases or anomalies that might arise from skewed datasets.

By integrating a “human-in-the-loop” approach, engineering teams can leverage AI’s speed and scale while minimizing unforeseen risks.

Avoiding Over-Reliance on Automation

While AI can accelerate countless tasks, leaning on it exclusively risks atrophy of core engineering skills and can mask deeper systemic issues. If automation handles every step, teams may lose the context needed to diagnose failures, adapt to unexpected edge cases, or innovate beyond the AI’s current capabilities.

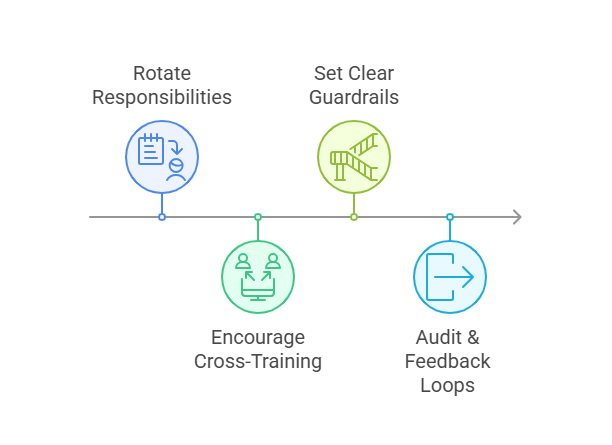

Key Human Safeguards

- Rotate Responsibilities: Schedule periodic “zero automation” sprints where engineers complete tasks—like code reviews, deployments, or testing—manually. This preserves institutional knowledge and ensures the team can operate independently if tools are unavailable.

- Encourage Cross-Training: Pair junior and senior engineers to shadow both AI-driven and manual workflows. This builds shared expertise in prompt engineering, model-ops, and the underlying processes, so no single point of failure compromises productivity.

- Set Clear Guardrails: Define thresholds, such as minimum confidence scores, rate limits, or error budgets, that trigger human intervention when crossed. Implement automated alerts and fallback procedures (e.g., switch to cached outputs or escalate to on-call engineers).

- Audit & Feedback Loops: Maintain logs of AI-generated suggestions alongside manual edits. Periodically review these logs in retrospective meetings to identify recurring AI blind spots and update prompts or retraining datasets accordingly.

By embedding these safeguards, engineering teams can harness AI’s speed without sacrificing the human judgment and adaptability that drive long-term innovation.
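The guardrail idea can be sketched as a small routing function that decides whether an AI suggestion is applied automatically, sent to a human, or replaced by a fallback. Everything here is an assumption made for illustration: the `Route` and `Guardrails` names, the threshold values, and the idea that your tooling exposes a confidence score and a recent error rate.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTO_APPLY = "auto_apply"
    HUMAN_REVIEW = "human_review"
    FALLBACK = "fallback"


@dataclass
class Guardrails:
    min_confidence: float = 0.85  # below this, a human reviews the suggestion
    max_error_rate: float = 0.05  # error budget over the recent window


def route_suggestion(confidence: float, recent_error_rate: float,
                     rails: Optional[Guardrails] = None) -> Route:
    """Decide how to handle one AI suggestion under the given guardrails."""
    rails = rails or Guardrails()
    # An exhausted error budget overrides everything: stop trusting the tool.
    if recent_error_rate > rails.max_error_rate:
        return Route.FALLBACK
    if confidence < rails.min_confidence:
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPLY


print(route_suggestion(confidence=0.95, recent_error_rate=0.01))
print(route_suggestion(confidence=0.60, recent_error_rate=0.01))
print(route_suggestion(confidence=0.95, recent_error_rate=0.10))
```

Checking the error budget first is a design choice: when the tool has been wrong too often recently, a single high-confidence answer should not bypass the fallback.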

Ensuring Data Governance & Ethics

Robust data governance and an ethics-first approach are non-negotiable for reliable, trustworthy AI. Engineering teams must treat data as a strategic asset—enforcing standards, securing sensitive information, and proactively mitigating bias.

Core Ethical & Governance Practices

- Enforce Data Standards: Define and document naming conventions, schema versions, and metadata requirements. Automate validation checks (e.g., schema registry, data-quality pipelines) to catch anomalies before they impact model training.

- Secure Sensitive Data: Encrypt data both at rest and in transit using industry-standard protocols (AES-256, TLS 1.3). Implement role-based access controls, audit logs, and tokenization for personally identifiable information (PII) or proprietary code.

- Regular Compliance Audits: Schedule periodic reviews against GDPR, CCPA, HIPAA, or other relevant regulations. Use automated compliance scanners to flag policy deviations and generate actionable remediation tickets.

- Adopt an Ethics-Focused Mindset: Build bias-detection workflows using tools like AIF360 or Fairlearn. Conduct fairness audits across demographic segments, and establish a cross-functional ethics review board to govern model releases.
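As a rough illustration of an automated validation check, the sketch below verifies that a record carries the expected fields and types before it enters a training set. It is a plain-Python stand-in for a schema registry or data-quality pipeline; the `REQUIRED_SCHEMA` fields and the sample records are hypothetical.

```python
from typing import Dict, List

# Hypothetical schema for build telemetry records.
REQUIRED_SCHEMA: Dict[str, type] = {
    "build_id": str,
    "duration_s": float,
    "passed": bool,
}


def validate_record(record: dict, schema: Dict[str, type] = REQUIRED_SCHEMA) -> List[str]:
    """Return a list of problems; an empty list means the record is valid."""
    problems = []
    for field, expected in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems


good = {"build_id": "b-1", "duration_s": 12.5, "passed": True}
bad = {"build_id": "b-2", "duration_s": "fast"}

print(validate_record(good))
print(validate_record(bad))
```

Records that return a non-empty list can be quarantined and reported rather than silently dropped, which keeps the audit trail intact.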

By combining stringent data controls with ethical guardrails, engineering teams ensure that AI systems remain accountable, compliant, and aligned with organizational values.
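A fairness audit across segments often starts with a simple comparison of selection rates. The sketch below computes a demographic parity difference by hand so the arithmetic is visible; libraries such as Fairlearn provide a comparable metric. The function names, group labels, and predictions are assumptions for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive predictions (value 1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs for two segments.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
segments = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, segments)
gap = demographic_parity_difference(preds, segments)
print(rates, gap)
```

A gap of zero means every segment receives positive predictions at the same rate. What gap is acceptable is a policy question for the ethics review board, not something the metric can answer on its own.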

Updating & Retraining AI Models

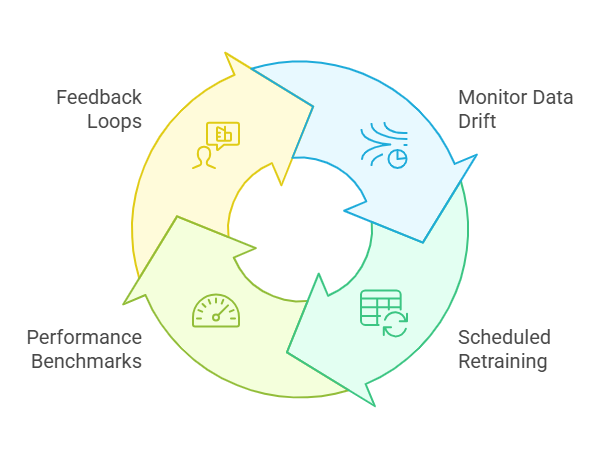

Continuous improvement is key to keeping AI systems accurate and aligned with evolving needs. Without regular updates, models can degrade as data distributions shift or business goals change.

- Monitor Data Drift: Track input feature distributions and prediction statistics. Automated alerts on significant deviations will signal when retraining is needed.

- Scheduled Retraining: Establish a cadence (weekly, monthly, or quarterly) based on data volume and volatility. Automate pipelines to pull new labeled data, retrain models, and run validation tests.

- Performance Benchmarks: Before each release, compare metrics (accuracy, F1, AUC) against established baselines. Gate deployments on meeting or exceeding these thresholds to prevent regressions.

- Feedback Loops: Incorporate user or stakeholder feedback into your training data. Flag mispredictions in production and feed them back into the next training cycle.
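Drift monitoring can be sketched with the Population Stability Index (PSI), one of several possible drift measures and not necessarily the one your tooling uses. The function below bins a baseline feature sample, compares a newer sample against it, and returns a single score. The bin count, the 1e-6 floor, the 0.2 alert level, and the sample data are assumptions; treat the alert level as a rule of thumb to tune.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a newer sample of one feature.
    Bin edges come from the baseline; out-of-range values are clipped
    into the first or last bin."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training-time baseline vs. production.
baseline_values = [float(v) for v in range(100)]
shifted_values = [v + 50.0 for v in baseline_values]

stable_psi = population_stability_index(baseline_values, baseline_values)
drift_psi = population_stability_index(baseline_values, shifted_values)

ALERT_LEVEL = 0.2  # assumed threshold; tune per feature
print(stable_psi, drift_psi > ALERT_LEVEL)
```

An alert from a check like this is a prompt to investigate, not proof that retraining is required; seasonal effects or an upstream schema change can produce the same signal.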

By embedding retraining and evaluation into your DevOps workflows, AI becomes a living component of your engineering process—continuously learning and improving rather than stagnating.
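Gating a deployment on benchmark results can be as small as one comparison step in the pipeline. The sketch below assumes higher is better for every metric and that metrics arrive as plain dictionaries; the function name, the metric values, and the tolerance parameter are hypothetical.

```python
def gate_release(candidate: dict, baseline: dict, tolerance: float = 0.0) -> list:
    """Return the names of metrics where the candidate is missing or falls
    below the baseline by more than `tolerance`. Empty list means pass."""
    regressions = []
    for name, base_value in baseline.items():
        new_value = candidate.get(name)
        if new_value is None or new_value < base_value - tolerance:
            regressions.append(name)
    return regressions


# Hypothetical evaluation results.
baseline_metrics = {"accuracy": 0.91, "f1": 0.88, "auc": 0.95}
candidate_metrics = {"accuracy": 0.92, "f1": 0.86, "auc": 0.95}

blocked_on = gate_release(candidate_metrics, baseline_metrics)
if blocked_on:
    print("Deployment blocked, regressions in:", blocked_on)
else:
    print("All metrics meet the baseline")
```

A non-zero tolerance lets small run-to-run noise through, but choosing it is a judgment call that belongs to the people who own the model.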

Cultivating a Culture of Innovation

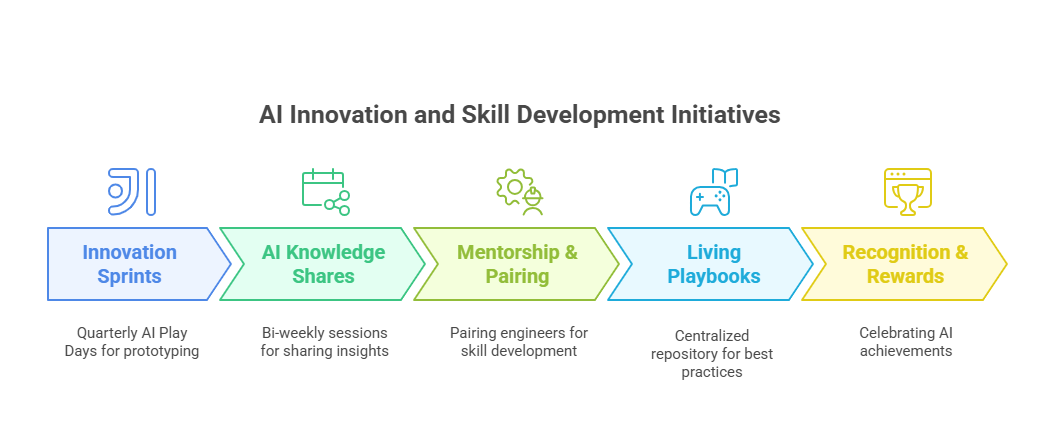

True AI-driven transformation goes beyond tools—it requires a culture that embraces experimentation, continuous learning, and seamless collaboration. By empowering engineers to explore AI in safe, structured ways, you’ll unlock novel solutions while preserving quality and accountability.

Innovation Framework & Practices

- Innovation Sprints: Run quarterly “AI Play Days” where cross-functional teams prototype ideas—chatbots, anomaly detectors, automated test generators—and demo outcomes to leadership.

- AI Knowledge Shares: Host bi-weekly lightning talks or “show & tell” sessions where engineers and data scientists present new libraries, model insights, or lessons learned from failures.

- Mentorship & Pairing: Pair less-experienced engineers with AI specialists for hands-on sessions—covering prompt crafting, model tuning, or pipeline debugging—to build skills across the team.

- Living Playbooks: Maintain a centralized, versioned repository of best practices, prompt templates, and architecture patterns—encouraging contributions from anyone who discovers a useful tip or tool.

- Recognition & Rewards: Feature standout AI experiments in internal newsletters, offer “Innovation Awards” for impactful prototypes, and share metrics on time saved or defects caught.

By embedding these practices into your cadence—combining structured experimentation with transparent sharing and recognition—you’ll cultivate an AI-ready culture that continuously adapts, learns, and pushes the boundaries of what’s possible.

Conclusion

Striking the right balance between AI-driven efficiency and human insight is pivotal for any engineering team aiming to stay competitive. By anchoring AI initiatives to well-defined business objectives, enforcing stringent data governance, and embedding human-in-the-loop checkpoints, you can harness AI’s speed without sacrificing the expertise and creativity that only humans bring.

AI should serve as an augmentation—not a replacement—of your engineers’ skills. Through continuous model retraining, thoughtful ethical guardrails, and a culture that rewards experimentation and collaboration, teams can leverage AI to deliver more reliable, resilient, and innovative solutions.

Embrace AI responsibly, and you’ll unlock new levels of productivity and quality—while preserving the critical human judgment that drives true innovation.
