Secure AI Adoption: Balancing Innovation, Compliance, and Trust

Artificial Intelligence represents one of the most transformative forces in business today. Yet for every company racing to deploy AI, there’s an equally important question that too few leaders are asking:

How do we harness the power of AI without compromising security, privacy, or compliance?

If your organization handles sensitive employee data—salaries, performance reviews, internal communications—or manages confidential financial or customer information protected by regulations like HIPAA, GDPR, or CCPA, the answer cannot simply be “trust the model.” You need structure, governance, and intentional design.

Let’s walk through the blueprint.

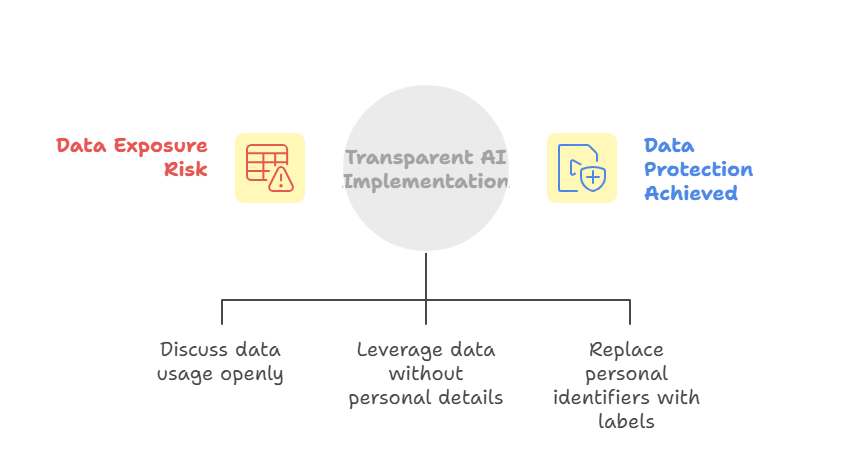

Start with Transparency: Opening the Conversation Internally

Security begins not with encryption, but with honesty.

When introducing AI to your organization, the first step is to have a direct, transparent conversation with your team about what data might be used, why it’s being considered, and how it will be protected.

For example, if you’re analyzing compensation trends or workforce performance, you don’t need to expose individual salaries or names. The goal is to leverage insights in aggregate—to help forecast hiring needs or budget allocations—without exposing personal details.
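One way to keep this kind of analysis at the aggregate level is to report group averages only and to suppress any group too small to hide an individual. The sketch below assumes hypothetical `department` and `salary` fields and an illustrative minimum group size of five; the right threshold depends on your data and your policies.

```python
from collections import defaultdict
from statistics import mean

# Assumed threshold for illustration: groups smaller than this are suppressed
# so that a single person's salary cannot be read off the report.
MIN_GROUP_SIZE = 5


def average_salary_by_department(rows, min_group_size=MIN_GROUP_SIZE):
    """Return the average salary per department, skipping small groups."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["department"]].append(row["salary"])
    return {
        department: round(mean(salaries), 2)
        for department, salaries in groups.items()
        if len(salaries) >= min_group_size
    }


# Hypothetical sample data: five people in Sales, one in Legal.
rows = [
    {"department": "Sales", "salary": 50000},
    {"department": "Sales", "salary": 55000},
    {"department": "Sales", "salary": 60000},
    {"department": "Sales", "salary": 65000},
    {"department": "Sales", "salary": 70000},
    {"department": "Legal", "salary": 90000},
]
report = average_salary_by_department(rows)
print(report)
```

Legal is left out of the report because a one-person "average" would simply reveal that person's salary.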

This mindset shift is crucial: compliant AI adoption is not about data dumping. It’s about data discipline.

A simple but effective practice is data de-identification—replacing personal identifiers like names or emails with generalized labels such as Department A, Region B, or Customer X. That way, if there’s ever an incident, the real identities remain protected.
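A minimal sketch of that label substitution follows. The `Pseudonymizer` class and the field names are illustrative, not part of any particular library. Note that the lookup table it builds can re-identify people, so it should be stored and protected separately from the de-identified dataset.

```python
from itertools import count


class Pseudonymizer:
    """Replace identifiers with stable generic labels such as 'Customer 1'."""

    def __init__(self, prefix):
        self.prefix = prefix
        self._labels = {}          # real identifier -> label (keep this secret)
        self._counter = count(1)

    def label_for(self, identifier):
        # Normalize so 'Jane@Example.com' and 'jane@example.com' share a label.
        key = identifier.strip().lower()
        if key not in self._labels:
            self._labels[key] = f"{self.prefix} {next(self._counter)}"
        return self._labels[key]


def deidentify(record, customers):
    """Return a copy of the record with the customer's name replaced by a label."""
    cleaned = dict(record)
    cleaned["customer"] = customers.label_for(record["customer"])
    return cleaned


customers = Pseudonymizer("Customer")
first = deidentify({"customer": "Acme Corp", "region": "West"}, customers)
second = deidentify({"customer": "Globex", "region": "East"}, customers)
repeat = deidentify({"customer": "acme corp ", "region": "West"}, customers)
print(first, second, repeat)
```

Because the same identifier always maps to the same label, you can still analyze trends per customer without the dataset itself carrying real names.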

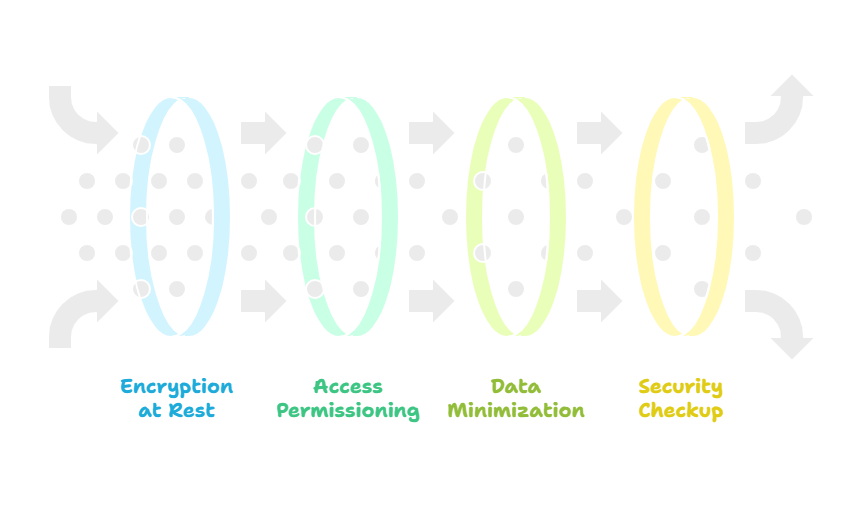

Encrypt, Restrict, and Simplify

Next, let’s talk about control.

Every sensitive dataset—salary spreadsheets, performance reviews, transcripts, or support recordings—should be encrypted at rest. Access must be tightly permissioned, ensuring that only authorized personnel can retrieve data from your training lake or AI repository.

And as a rule of thumb, less is more.

Feed the model only what it truly needs.

If your AI is predicting employee attrition, you might only require role type, tenure, and performance score—not personal contact details. The more selective your data footprint, the smaller your exposure and the cleaner your training outcomes.
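In code, "feed the model only what it needs" can be enforced with an allow-list applied before any record leaves your systems. The sketch below uses hypothetical field names for the attrition example; the point is that anything not explicitly allowed is dropped, rather than relying on a list of fields to exclude.

```python
# Assumed feature names for the attrition example; adjust to your own schema.
ATTRITION_FEATURES = ("role_type", "tenure_months", "performance_score")


def minimize(record, allowed=ATTRITION_FEATURES):
    """Keep only the allow-listed fields; fail loudly if one is missing."""
    missing = [field for field in allowed if field not in record]
    if missing:
        raise KeyError(f"record is missing required fields: {missing}")
    return {field: record[field] for field in allowed}


employee = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "phone": "555-123-4567",
    "role_type": "Engineer",
    "tenure_months": 28,
    "performance_score": 4.2,
}
training_row = minimize(employee)
print(training_row)
```

An allow-list fails safe: if someone adds a new sensitive column to the source table later, it stays out of the training data until a person decides it belongs there.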

If you’re unsure how secure your current data landscape is, take a moment to perform a Data Security & Privacy Checkup.

We offer one for free on our site: a simple, structured way to inventory your datasets and assess potential risk areas before they become liabilities.

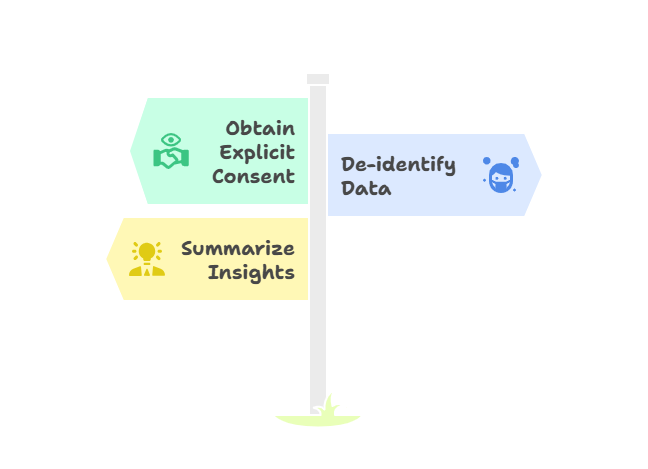

Handle Customer Data With the Care It Deserves

Customer information raises the stakes even higher.

Depending on your industry, you may fall under HIPAA, GDPR, CCPA, or other data protection regulations. But even if you don’t, the question remains: How would your customers feel if their information were used to train your AI?

That’s the standard.

For healthcare organizations, that means confirming explicit consent before using data in any AI context. For others, it means de-identifying conversations, aggregating insights, and ensuring that no personally identifiable information ever becomes training material.
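As a rough first pass at de-identifying conversations, obvious identifiers can be masked before a transcript goes anywhere near a training set. The patterns below are deliberately simple assumptions: they catch common email and phone formats but not names, addresses, or account numbers, so treat this as a starting point rather than a complete PII filter.

```python
import re

# Simplified patterns for illustration only; real PII detection needs more.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Mask email addresses and phone numbers in a transcript."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


transcript = "Reach me at jane.doe@example.com or 555-123-4567."
print(redact(transcript))
```

A human review or a dedicated PII detection tool is still worth adding on top, since free text hides identifiers in ways a pair of regular expressions will miss.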

In many cases, you can still extract tremendous value without risk by summarizing or abstracting the lessons from sensitive interactions. Instead of feeding an entire customer transcript, for example, train the AI on the key insights—the distilled, non-sensitive takeaways.

This allows you to learn from your data without ever exposing it.

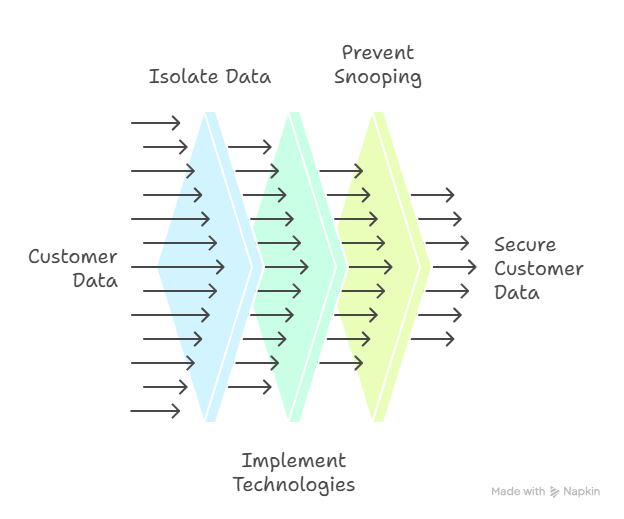

Silo Everything That Matters

Another critical layer of protection is siloing customer data.

Each client’s information should exist within its own isolated environment—never mingled with another customer’s dataset. If you’ve built a fine-tuned model or RAG pipeline for one client, it must remain logically and physically separate from others.

Vector databases that support per-client namespaces or collections, along with segmented data stores, make this straightforward.
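The core idea can be sketched as a store where every read and write is scoped to a single client. The in-memory `TenantStore` below is a hypothetical illustration of logical isolation only; physical separation would mean separate databases, accounts, or infrastructure per client, which no code snippet can provide by itself.

```python
class TenantStore:
    """Keep each client's documents in a separate namespace."""

    def __init__(self):
        self._namespaces = {}  # tenant_id -> {doc_id: text}

    def add(self, tenant_id, doc_id, text):
        self._namespaces.setdefault(tenant_id, {})[doc_id] = text

    def search(self, tenant_id, term):
        # Only the caller's own namespace is ever searched; there is no
        # method that queries across tenants.
        documents = self._namespaces.get(tenant_id, {})
        return [
            doc_id
            for doc_id, text in documents.items()
            if term.lower() in text.lower()
        ]


store = TenantStore()
store.add("client_a", "a-1", "Pricing strategy for Q3")
store.add("client_b", "b-1", "Pricing notes for the partner program")

print(store.search("client_a", "pricing"))
print(store.search("client_c", "pricing"))
```

The design choice that matters is that the tenant ID is a required argument on every call, so a query can never "forget" to filter by client.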

Beyond compliance, this approach guards against a subtler risk: competitor snooping. If an adversarial user were to query your AI platform, you don’t want any possibility—no matter how remote—that proprietary information could surface.

Your guiding principle should be simple: If a piece of data could harm your company or your customer in the wrong hands, it should never live in a shared environment.

Use RAG and Graph RAG for Secure, Real-Time Intelligence

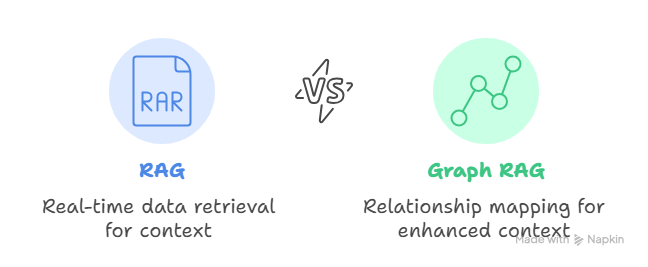

Retrieval-Augmented Generation (RAG) and its evolution, Graph RAG, represent a breakthrough in balancing performance and privacy.

Rather than storing all customer data inside the AI model, RAG allows the system to pull in relevant data in real time—essentially prefacing each AI request with precisely the context it needs, drawn securely from an external database.
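To make the flow concrete, here is a toy sketch of the retrieval step. It ranks documents by simple word overlap and builds a prompt from the best matches; a production system would typically use embeddings and a vector database instead, and the function names here are assumptions for illustration. No model is called, the output is just the prompt that would be sent.

```python
import re


def tokens(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents that share the most words with the query."""
    query_tokens = tokens(query)
    scored = [(len(query_tokens & tokens(doc)), doc) for doc in documents]
    relevant = [(score, doc) for score, doc in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_prompt(query, documents):
    """Preface the question with only the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


knowledge_base = [
    "Refund requests are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refund eligibility requires proof of purchase.",
]
question = "How are refund requests processed?"
print(build_prompt(question, knowledge_base))
```

Only the two refund-related entries end up in the prompt. The retrieval step is also the natural place to apply access checks, since whatever it returns is exactly what the model will see for that request.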

Graph RAG goes a step further by mapping relationships between data points, helping AI understand context without directly accessing raw records.

The result is a live system that keeps sensitive data out of the model’s training: records stay in a data store you control, and only the context relevant to each request, subject to your access controls, reaches the model at query time. It’s the best of both worlds: performance and protection.

Build for Compliance, Then Build for Culture

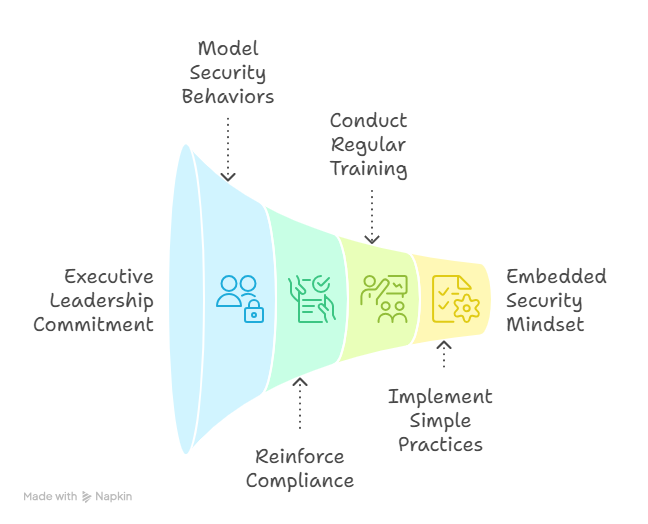

No technology, no matter how advanced, can replace a strong security culture.

Your people—not your platforms—are the first line of defense. The truth is, security only works when it’s embraced from the top down.

Executives must model the behavior they expect: practicing disciplined data sharing, reinforcing compliance requirements, and regularly engaging in short, focused security trainings.

This doesn’t need to be burdensome. A ten-minute monthly briefing, a quick checklist for sensitive data handling, or simple “see something, say something” reinforcement can embed a security mindset across your organization.

The goal isn’t paranoia—it’s professionalism. You’re not protecting data for the sake of compliance; you’re protecting the trust your customers place in your brand.

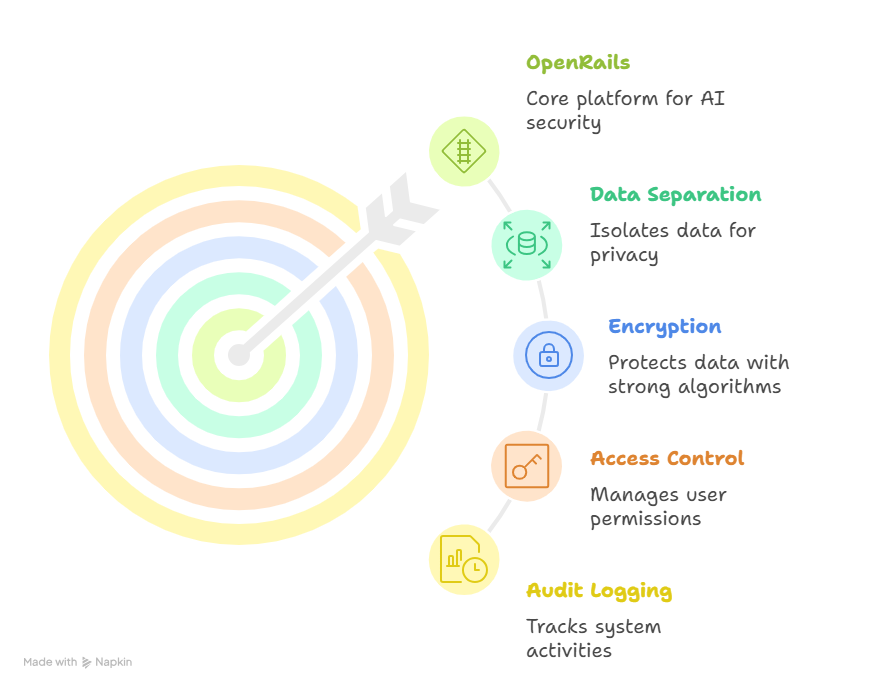

Tools That Simplify Security: The OpenRails Example

At TechLaunch, we built OpenRails, an open-core platform designed to make all of these principles—data separation, encryption, access control, and audit logging—turnkey.

It was born out of necessity: our clients needed a way to move fast on AI adoption without inviting security risk or compliance headaches. OpenRails allows teams to implement guardrails effortlessly, even without a PhD or a large data-engineering staff.

Whether you choose OpenRails or another framework, the point is the same: build your AI on a foundation that respects the law, honors customer privacy, and safeguards your enterprise reputation.

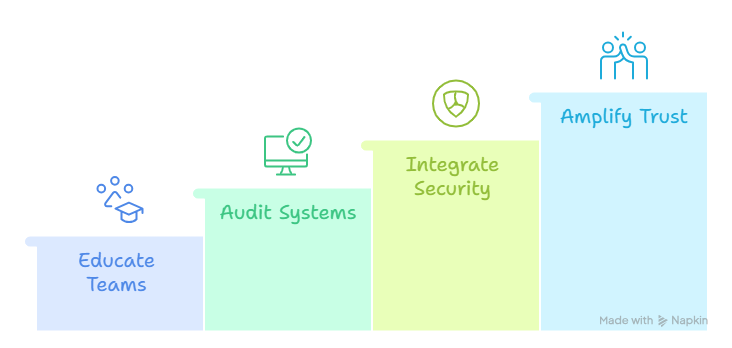

The Culture of Continuous Vigilance

Security isn’t a milestone—it’s a motion.

Threats evolve, regulations shift, and technologies advance. The only sustainable strategy is to stay adaptive. Keep your teams educated, audit your systems regularly, and make security a part of your brand’s identity.

Because at the end of the day, protecting data isn’t just about risk reduction—it’s about trust amplification.

When your customers, partners, and employees see that your organization treats data with care and integrity, it strengthens every relationship you have. And in a world increasingly driven by AI, trust will be your most valuable currency.

Final Thought

AI will redefine how every business operates. But how you protect your data will define how your business is remembered.

Don’t treat compliance as a box to check. Treat it as a competitive differentiator—a sign to the market that you lead with integrity, not just intelligence.

To get started, explore our free Data Security & Privacy Checkup, or enroll your team in our complimentary AI Security Training Course. Both resources are designed to help you build a secure, confident foundation for AI innovation.

Because real innovation isn’t just fast—it’s responsible.