Continuous Improvement & Retraining: Keeping Your AI Models Fresh

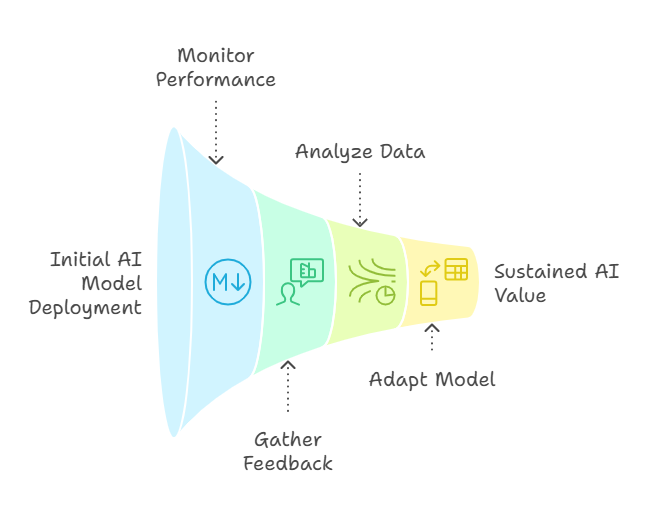

Markets don’t stand still, and neither does our data, so our AI models can’t be frozen in time. Continuous improvement means treating them like living products: feed them fresh feedback, run quick refresh sprints, and let them learn on the fly. That’s how we keep every prediction sharp and every recommendation relevant.

Why AI Is Never “Done”

Launching a model into production is exciting, but let’s be honest—it’s just opening day, not the end of the season. Customer tastes shift, new data rolls in, and our competitors never stop tweaking their own algorithms. If we freeze our model in place, it drifts out of touch, predictions slip, and trust erodes. The fix? Treat AI like a living product we update and refine constantly, so it keeps pace with the market and keeps delivering real value.

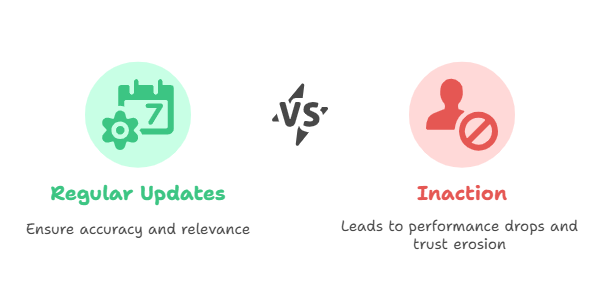

The Cost of Model Stagnation

Here’s the hard truth: if we don’t refresh our models, tiny shifts in customer data (what data scientists call data drift) snowball into big accuracy problems. Think about a sales forecast built on pre-pandemic habits: once buying patterns shift, that model is flying blind. Or a recommendation feed that used to wow shoppers but now feels off-key and annoying. Those slips cost us revenue and, more importantly, chip away at the trust we’ve worked so hard to earn. Inaction doesn’t just hurt today’s numbers; it weakens the confidence people have in everything we do with AI.

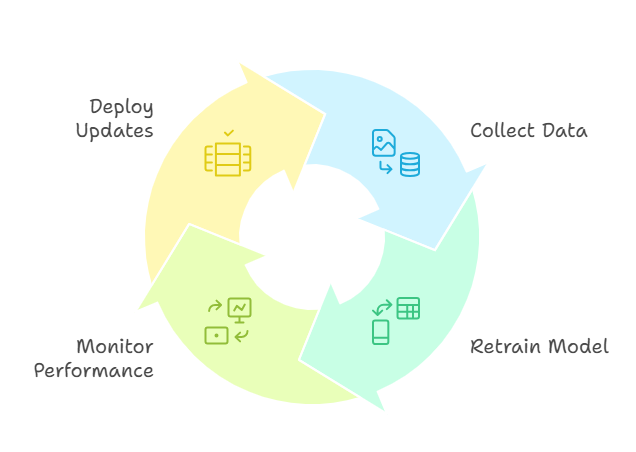

A Four‑Pillar Framework for Continuous Retraining

Here’s how we stay ahead of the curve: put a simple, four-pillar retraining playbook in place so our models evolve right alongside the market.

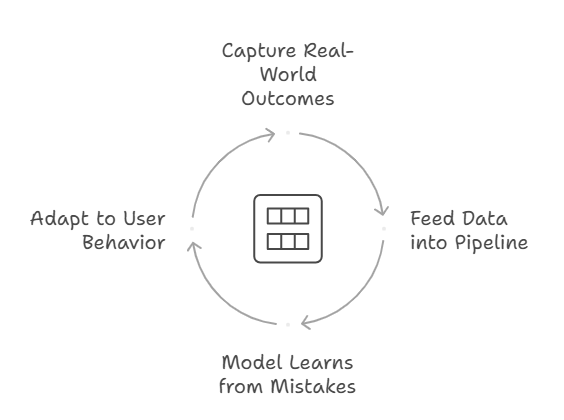

Closed‑Loop Feedback

First, let’s close the loop. Every click, purchase, or model misfire a customer runs into is a lesson in disguise. We capture those real-world signals and funnel them straight back into the training pipeline, so the model learns quickly from its own missteps and stays tuned to the latest customer behavior.
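To make the loop concrete, here is a minimal Python sketch of capturing feedback and turning it into training rows. The event fields, the `FeedbackBuffer` class, and the graded labeling rule (purchase = 2, click = 1, ignored = 0) are hypothetical choices for illustration, not a prescribed schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class FeedbackEvent:
    """One real-world signal tied to something the model showed (hypothetical schema)."""
    user_id: str
    item_id: str
    recommended: bool  # did the model put this item in front of the customer?
    clicked: bool
    purchased: bool

@dataclass
class FeedbackBuffer:
    """Collects live events until the training pipeline drains them."""
    events: List[FeedbackEvent] = field(default_factory=list)

    def record(self, event: FeedbackEvent) -> None:
        self.events.append(event)

    def drain(self) -> List[Tuple[Dict[str, str], int]]:
        """Convert buffered events to (features, label) rows and clear the buffer."""
        rows = []
        for e in self.events:
            if not e.recommended:
                continue  # only the model's own recommendations say how it performed
            # Assumed graded label: purchase = 2, click = 1, ignored = 0
            label = 2 if e.purchased else 1 if e.clicked else 0
            rows.append(({"user_id": e.user_id, "item_id": e.item_id}, label))
        self.events.clear()
        return rows

# Example: two recommended items and one organic purchase the model never showed
buffer = FeedbackBuffer()
buffer.record(FeedbackEvent("u1", "sku-42", recommended=True, clicked=True, purchased=True))
buffer.record(FeedbackEvent("u2", "sku-7", recommended=True, clicked=False, purchased=False))
buffer.record(FeedbackEvent("u3", "sku-9", recommended=False, clicked=True, purchased=True))
training_rows = buffer.drain()
```

Only items the model actually recommended produce rows, so it learns from its own choices. A production pipeline would also need deduplication and durable storage that survives restarts.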

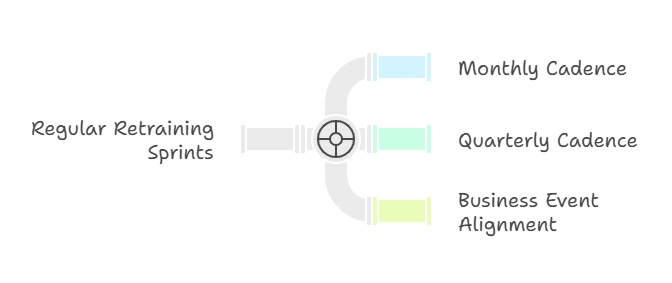

Agile Refresh Cycles

Second, no more “whenever we remember” updates. We run planned retraining sprints—monthly, quarterly, or around key business moments—just like our software releases. Those fixed checkpoints force us to pop the hood, tune the model, and refresh it long before accuracy starts to slip.
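A fixed cadence is easy to encode. The sketch below assumes we record the date of the last retrain and pick a `monthly` or `quarterly` cadence; the function names are illustrative, and event-driven checkpoints such as a big seasonal launch are left out.

```python
import calendar
from datetime import date

# Assumed cadences, expressed in months between retrains
CADENCE_MONTHS = {"monthly": 1, "quarterly": 3}

def add_months(d: date, months: int) -> date:
    """Move forward N months, clamping the day (e.g. Jan 31 + 1 month -> end of Feb)."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))

def next_retrain_date(last_retrain: date, cadence: str) -> date:
    return add_months(last_retrain, CADENCE_MONTHS[cadence])

def retrain_due(today: date, last_retrain: date, cadence: str) -> bool:
    """True once the scheduled checkpoint has arrived or passed."""
    return today >= next_retrain_date(last_retrain, cadence)
```

Because the check is a pure function of dates, a nightly scheduler can call `retrain_due` and stay idempotent: missing a night just means the retrain fires the next time it runs.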

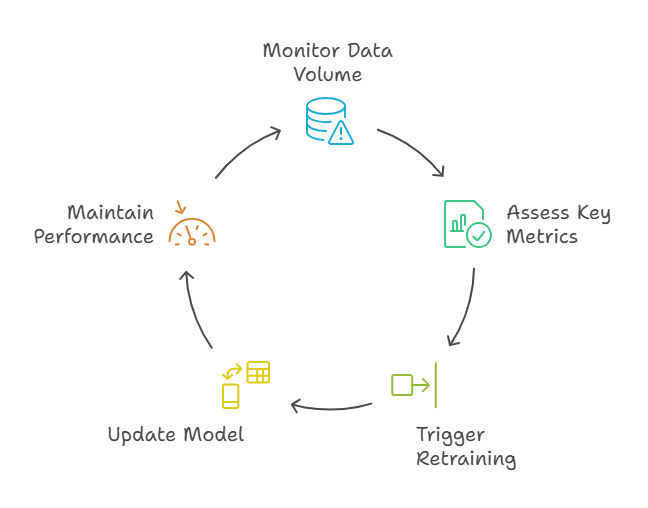

Continuous Learning Triggers

Third, let’s take our hands off the switch and let the system decide when it needs a tune-up. We set clear triggers—say, a flood of fresh data or accuracy dipping below 92 percent—and the platform spins up a retrain automatically. No waiting for someone to notice a problem. The model refreshes itself the moment the numbers tell us it should, so we stay sharp and never fly blind.
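Here is one way the trigger check might look. The 92 percent accuracy floor comes from the example above; the 50,000-row data threshold and the `ModelHealth` fields are assumptions, and the function only decides whether to retrain rather than launching the job itself.

```python
from dataclasses import dataclass
from typing import List

ACCURACY_FLOOR = 0.92        # the 92 percent example from the text
NEW_DATA_THRESHOLD = 50_000  # hypothetical: fresh rows that justify a retrain

@dataclass
class ModelHealth:
    accuracy: float            # accuracy measured on recent, labeled traffic
    new_rows_since_train: int  # fresh data collected since the last retrain

def retrain_reasons(health: ModelHealth,
                    accuracy_floor: float = ACCURACY_FLOOR,
                    new_data_threshold: int = NEW_DATA_THRESHOLD) -> List[str]:
    """Return every trigger that fired; an empty list means no retrain is needed."""
    reasons = []
    if health.accuracy < accuracy_floor:
        reasons.append(f"accuracy {health.accuracy:.1%} below floor {accuracy_floor:.0%}")
    if health.new_rows_since_train >= new_data_threshold:
        reasons.append(f"{health.new_rows_since_train} new rows >= {new_data_threshold}")
    return reasons
```

Returning the reasons, not just a yes/no, gives the governance log a record of why each automatic retrain fired.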

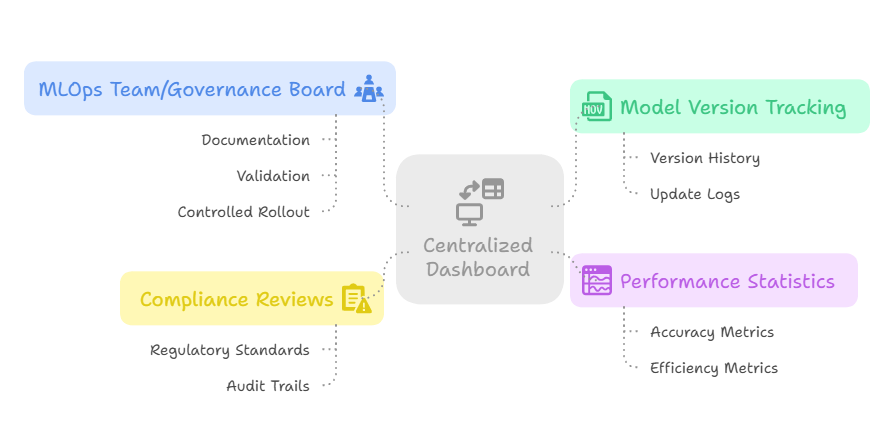

Monitoring & Governance

Finally, we need a single scoreboard everyone can trust. One central dashboard should show which model version is live, how it’s performing, and whether it’s cleared all the compliance checks. Our MLOps crew—or whatever governance body you choose—signs off on every update and logs the details, so we don’t end up with mystery versions floating around or regulators asking awkward questions. In short, clear ownership and transparent metrics keep the whole system tight and audit-ready.
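A lightweight model registry captures the same idea in code: one place that records versions, enforces sign-off, and keeps an audit trail. This `ModelRegistry` is a hypothetical in-memory sketch; in practice teams often use a dedicated registry service (MLflow’s Model Registry is one example), whose API differs from this.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional

@dataclass
class ModelVersion:
    version: str
    accuracy: float
    compliance_passed: bool = False
    approved_by: Optional[str] = None

@dataclass
class ModelRegistry:
    """Hypothetical single source of truth for which model version is live."""
    versions: Dict[str, ModelVersion] = field(default_factory=dict)
    live_version: Optional[str] = None
    audit_log: List[str] = field(default_factory=list)

    def _log(self, message: str) -> None:
        # Timestamp every entry in UTC so the trail is unambiguous for auditors
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.audit_log.append(f"{stamp} {message}")

    def register(self, mv: ModelVersion) -> None:
        self.versions[mv.version] = mv
        self._log(f"registered {mv.version} (accuracy={mv.accuracy:.3f})")

    def approve(self, version: str, reviewer: str) -> None:
        mv = self.versions[version]
        if not mv.compliance_passed:
            raise ValueError(f"{version} has not cleared compliance checks")
        mv.approved_by = reviewer
        self._log(f"{version} approved by {reviewer}")

    def promote(self, version: str) -> None:
        mv = self.versions[version]
        if mv.approved_by is None:
            raise PermissionError(f"{version} needs sign-off before going live")
        previous = self.live_version
        self.live_version = version
        self._log(f"promoted {version} (previous: {previous})")
```

The design choice is that promotion is impossible without an approval, and approval is impossible without passing compliance, so "mystery versions" cannot reach production through this path.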

Iterative Improvement in Action

Picture this: we’re running a solid mid-size e-commerce shop. Our new recommendation engine comes out of the gate hot—sales jump 20% and the board is thrilled. Fast-forward six months and the shine is gone: conversions drift south, and the support inbox fills with “Why are you showing me this?” emails.

Here’s how we got it back on track:

- Listen first. We started piping every click, view, and purchase straight back into the model—so it could learn from what customers were actually doing, not what they did last quarter.

- Put retraining on the calendar. On the first business day of every month the model gets a full refresh, no excuses.

- Let the system police itself. If accuracy ever slips below 92 percent, an automated pipeline kicks in and retrains on the latest data—before anyone notices a thing.

- Keep score in one place. A lean MLOps crew watches a live dashboard, signs off on each new release, and makes sure we stay on the right side of compliance.
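The calendar rule from the case study, refreshing on the first business day of every month, can be checked with the standard library alone. This sketch treats Monday through Friday as business days and ignores public holidays, which is a simplifying assumption.

```python
from datetime import date, timedelta

def first_business_day(year: int, month: int) -> date:
    """First Monday-to-Friday date of the month; public holidays are ignored."""
    d = date(year, month, 1)
    while d.weekday() >= 5:  # weekday(): 5 = Saturday, 6 = Sunday
        d += timedelta(days=1)
    return d

def is_monthly_refresh_day(today: date) -> bool:
    """True only on the day the scheduled monthly refresh should run."""
    return today == first_business_day(today.year, today.month)
```

For example, June 1, 2024 fell on a Saturday, so under this rule that month’s refresh would run on Monday, June 3.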

Result? Conversions bounced back within a couple of weeks and customer gripes vanished. More important, the process now runs on rails, so we’re not scrambling every time tastes change—we’re already adapting in the background.

Embedding Continuous Learning Across the Enterprise

Here’s the simple truth I share with our teams every quarter: an AI model is only as valuable as its last lesson. If we freeze it in time, it starts sliding backward the minute the market moves. But when we make feedback loops, scheduled tune-ups, auto-retraining, and tight oversight part of our operating rhythm, the model grows right alongside our customers. That discipline keeps performance high, spots fresh opportunities early, and turns AI into a living, breathing advantage—not another piece of legacy tech we’ll regret in two years.

Next Steps

Book a Model Lifecycle Consultation

Work one‑on‑one with our experts to tailor a continuous‑learning roadmap for your unique environment.

Share & Subscribe

Help peers discover how to keep their AI solutions fresh and aligned with ever‑changing markets.

Embark on your continuous improvement journey today—and ensure your AI models evolve as quickly as the world around them.