AI Model Monitoring & Maintenance Guide – Keep Production Models Healthy

Introduction

Rolling a new AI model into production always feels like a win—but if we don’t stay laser-focused on AI model monitoring and maintenance, it’s all too easy to slip into “set-it-and-forget-it” mode. I’ve learned the hard way that a live model isn’t a perpetual-motion machine; it needs the same steady care we give our core apps and infrastructure. When data drifts, libraries update, or users push the system in unexpected ways, performance can slide long before anyone notices. Ignore those warning signs and accuracy drops, customers lose confidence, and—worst of all—our brand pays the price.

Key Takeaway

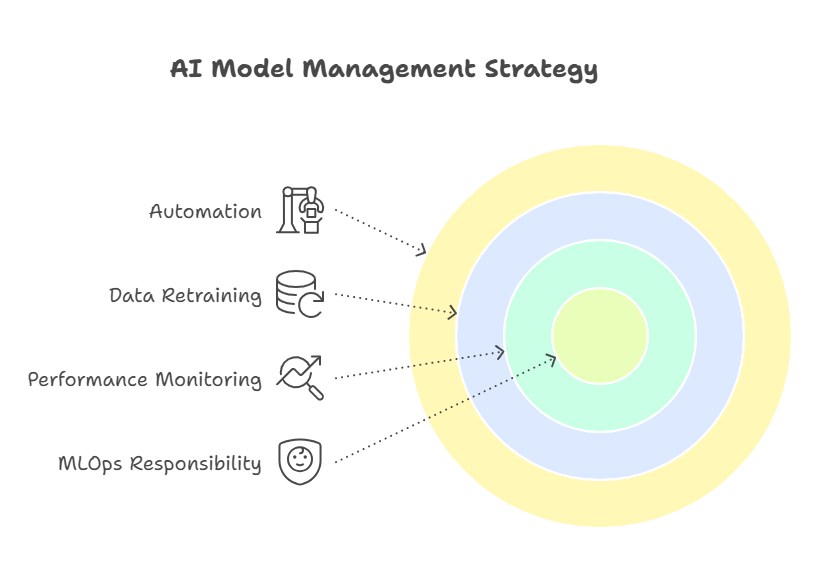

Here’s the reality I share with every team: our AI can’t fly on autopilot. Left unwatched, a model drifts and its performance degrades quietly. That’s why we lean on real-time dashboards, automatic alerts, and automatically triggered retraining to flag problems and fix them fast. We treat upkeep (version control, data audits, all the boring-but-vital routines) as part of the product, not an afterthought. With a dedicated MLOps crew minding the shop, our models stay in lockstep with the business instead of falling behind.

Why Continuous Model Care Matters

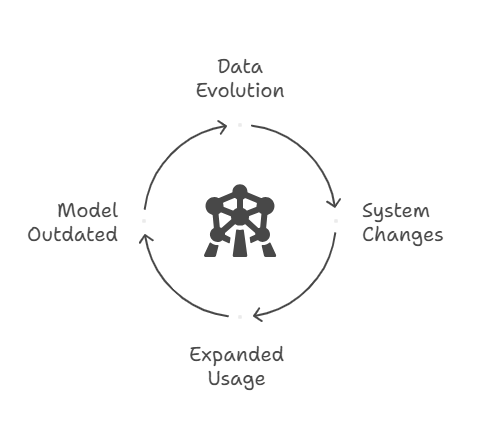

Moving a model from the lab into production is worth a toast—but let’s be clear: that’s the starting line, not the finish. Without disciplined AI model monitoring and maintenance, shifting customer behavior, fresh data streams, and subtle system tweaks will steadily chip away at the accuracy we were so proud of on launch day.

- The market never sits still. Customer habits, pricing trends, even the weather can shift overnight. A model trained on last year’s data will start to drift the moment those patterns change.

- The plumbing keeps evolving. Every time we update a library, swap out hardware, or move workloads to a new cloud region, we introduce tiny differences in how the model runs. One minor tweak can snowball into a measurable gap.

- Teams get creative. Give smart people a powerful model and they’ll dream up new use cases we never planned for. Those edge scenarios are great for innovation, but they also expose blind spots that can trip us up if we’re not watching.

If we ignore those creeping changes, the costs add up—unhappy customers, botched operations, and potential compliance headaches. The cure is simple in principle but critical in practice: build monitoring and maintenance into the model’s life from day one. Treat every model like a living, breathing asset, not a “fire-and-forget” side project.

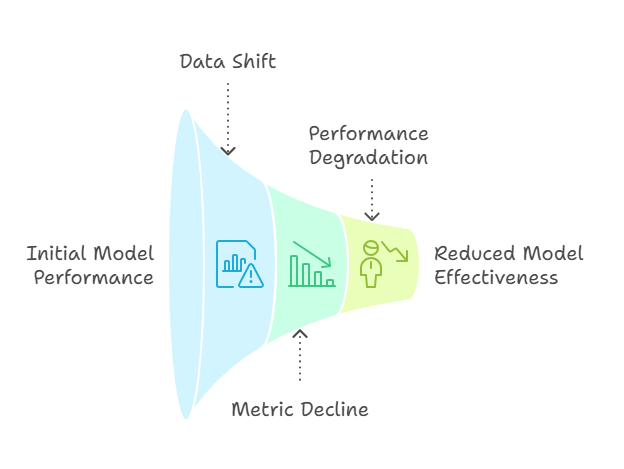

Spotting Drift & Performance Dips Early

Here’s the upside of solid AI model monitoring and maintenance: we spot trouble while it’s still a blip on the radar, not front-page news. Models almost never implode overnight; they drift, one percentage point at a time. When accuracy dips, latency creeps, or error rates tick up, that’s our signal to act. Consider a demand-forecast model built around pre-pandemic shopping habits: without intervention, it can’t make sense of post-pandemic behavior. Or a segmentation engine that nailed last year’s tastes but now sounds tone-deaf. By tracking those metrics in real time, we can step in early, retrain fast, and keep both the model and the business on course.
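One common way to put a number on input drift is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks today. The sketch below is a minimal, standard-library version; the function name, the ten-bin default, and the small floor for empty bins are assumptions made for illustration, not something this guide prescribes.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a current sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps log() defined when a bin is empty (assumed value).
        return [max(c / len(values), 1e-6) for c in counts]

    base = bucket_shares(baseline)
    cur = bucket_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Illustrative data: the "current" sample is the baseline shifted upward.
training_sample = [i / 100 for i in range(1000)]
todays_sample = [x + 3 for x in training_sample]
psi = population_stability_index(training_sample, todays_sample)
```

A PSI of zero means the two samples fall into the bins in identical proportions. A frequently quoted rule of thumb treats values above roughly 0.2 as a shift worth investigating, but that cutoff is a convention to tune per feature, not a guarantee.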

Let’s be clear—when a model slips, it’s not just a tech glitch; it’s money out the door and credibility on the line. Picture our pricing engine quietly under-valuing premium products, squeezing margins every single day. Or a fraud filter that lets more bad transactions slip through, chipping away at customer confidence and hiking our risk exposure. The longer we ignore those tiny cracks, the faster they spread—and the harder (and costlier) it is to rebuild trust once things go sideways.

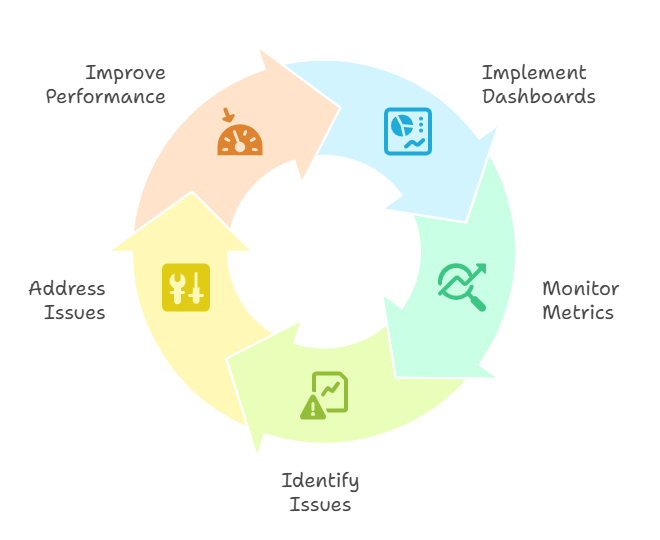

Real‑Time Monitoring Toolkit

That’s why we arm ourselves with live, at-a-glance dashboards. Think of them as the flight instruments for every model we’ve got in the air. Accuracy, latency, volume, error rates—it’s all right there, updating in real time. If a metric starts to wobble, we see it instantly and course-correct long before a customer notices anything’s off. It’s proactive peace of mind, not reactive damage control.
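Behind a dashboard like that sits some form of rolling aggregation. As a rough sketch of the idea, the class below keeps the most recent observations in a fixed-size window and reports the four metrics named above. The class and field names are hypothetical, and it assumes the true label is available when each observation is recorded, which in practice often arrives later.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Observation:
    correct: bool      # did the prediction match the eventual label?
    latency_ms: float  # end-to-end response time
    failed: bool       # did the request end in an error?


class RollingMonitor:
    """Keeps the last `window` observations and summarizes them on demand."""

    def __init__(self, window: int = 1000):
        self._obs = deque(maxlen=window)  # old entries fall off automatically

    def record(self, obs: Observation) -> None:
        self._obs.append(obs)

    def snapshot(self) -> dict:
        n = len(self._obs)
        if n == 0:
            return {"volume": 0}
        served = [o for o in self._obs if not o.failed]
        latencies = sorted(o.latency_ms for o in self._obs)
        return {
            "volume": n,
            # Accuracy is measured only over requests that returned a prediction.
            "accuracy": sum(o.correct for o in served) / len(served) if served else 0.0,
            "error_rate": (n - len(served)) / n,
            "p95_latency_ms": latencies[min(n - 1, int(0.95 * n))],
        }
```

A dashboard would poll `snapshot()` on a timer and plot each value; the window size controls how quickly a wobble shows up versus how noisy the line looks.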

Dashboards are only half the safety net. We also wire up smart alerts that ping us (by Slack, text, whatever works) the second a key metric drifts out of bounds. If accuracy dips under 90 percent or response times exceed our SLA, the right people know before customers do, and they have a playbook in hand so fixes start immediately.
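The alerting logic itself can be very small. Here is a hedged sketch of threshold rules like the ones just described: the 90 percent accuracy floor comes from the text, while the 300 ms latency limit, the metric names, and the `notify` helper are placeholders for whatever your SLA and messaging channel actually are.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AlertRule:
    metric: str
    breached: Callable[[float], bool]
    message: str


RULES = [
    AlertRule("accuracy", lambda v: v < 0.90, "accuracy below 90%"),
    # 300 ms is an assumed SLA, used here only for illustration.
    AlertRule("p95_latency_ms", lambda v: v > 300.0, "p95 latency above SLA"),
]


def evaluate(metrics: dict, rules: list = RULES) -> list:
    """Return the message of every rule whose metric is present and out of bounds."""
    return [
        r.message
        for r in rules
        if r.metric in metrics and r.breached(metrics[r.metric])
    ]


def notify(alerts: list, send: Callable[[str], None] = print) -> None:
    """Hand each alert to a sender; swap `print` for a Slack or SMS client."""
    for alert in alerts:
        send(f"[model-alert] {alert}")


notify(evaluate({"accuracy": 0.88, "p95_latency_ms": 120.0}))
```

Keeping the rules as data rather than scattered `if` statements makes it easier to review thresholds with the business and to attach a playbook link to each message.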

Even better, much of the heavy lifting can be hands-free. When performance slips, automated pipelines pull fresh data, retrain the model, and push the new version to production. No one is stuck babysitting cron jobs or chasing stale code.
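The control flow of such a pipeline might look like the sketch below. Every callable passed in (`load_fresh_data`, `train`, `evaluate`, `deploy`) is a hypothetical hook standing in for your own tooling. The check that the retrained candidate must beat the live score before it ships is an added safeguard assumed for this example, not a step the text above spells out.

```python
from typing import Any, Callable


def maybe_retrain(
    current_score: float,
    threshold: float,
    load_fresh_data: Callable[[], Any],
    train: Callable[[Any], Any],
    evaluate: Callable[[Any], float],
    deploy: Callable[[Any], None],
) -> str:
    """Retrain only when the live score is below threshold; ship only improvements."""
    if current_score >= threshold:
        return "healthy"  # nothing to do

    data = load_fresh_data()
    candidate = train(data)

    # Assumed safeguard: score the candidate (ideally on held-out data)
    # and refuse to deploy anything that does not beat the live model.
    if evaluate(candidate) <= current_score:
        return "rejected"

    deploy(candidate)
    return "deployed"


# Usage with stand-in hooks:
shipped = []
outcome = maybe_retrain(
    current_score=0.85,
    threshold=0.90,
    load_fresh_data=lambda: [1, 2, 3],
    train=lambda rows: {"trained_on": len(rows)},
    evaluate=lambda model: 0.93,
    deploy=shipped.append,
)
```

Returning a status string rather than raising makes it straightforward to log each run and to alert a human when a candidate is rejected.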

To keep it all humming, we’ve put an MLOps crew in charge. Their sole mandate: guard model health, run regular performance reviews, and jump on anomalies fast. That clear line of ownership lets our data scientists focus on building what’s next, while the business runs on AI we can trust.

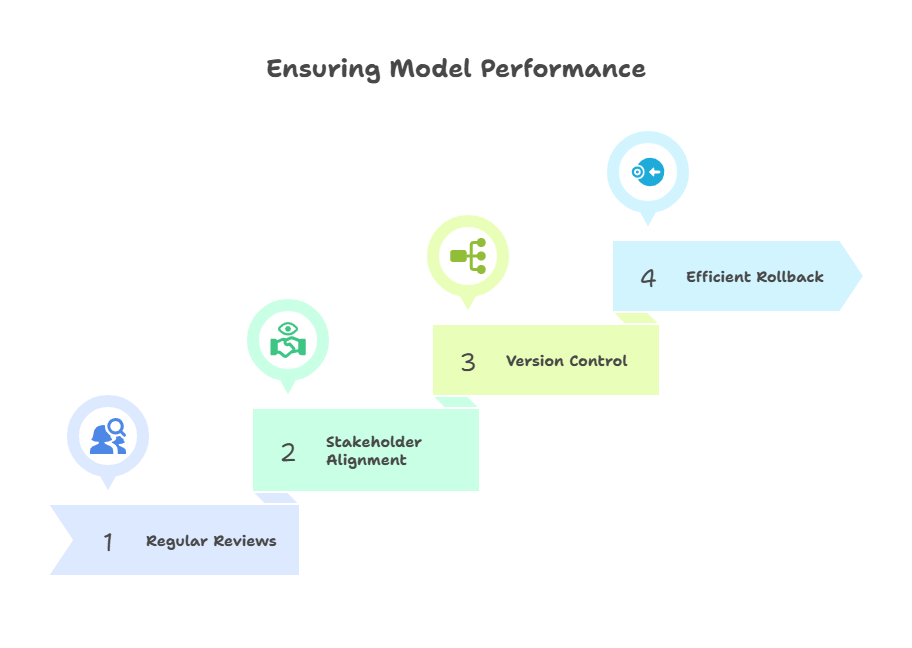

Maintenance Routines for Sustainable AI

Spotting a wobble is only half the battle; fixing it fast is where the real value shows up. We bake model check-ups into our regular operating rhythm—think monthly or quarterly stand-ups where data scientists and business leads sit at the same table. Together we ask the simple question: “Is this model still hitting the numbers we need?” If the answer is no, we agree on a retrain or a quick tune-up and move on.

Just as critical is rock-solid version control. Every tweak gets its own tag in our model registry, so if a hot-off-the-press update flops, we can revert to the last proven build with a single click. No scrambling, no guesswork—just a clean, reliable history that keeps the business moving while we perfect the next release.
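To make the rollback idea concrete, here is a toy in-memory registry. It is only a sketch of the bookkeeping involved: the class and method names are invented for this example, and a real registry would persist artifacts and metadata rather than hold them in a dictionary.

```python
class ModelRegistry:
    """Toy registry: tagged versions plus a promotion history for rollback."""

    def __init__(self):
        self._versions = {}  # tag -> model artifact
        self._history = []   # tags in the order they went live

    def register(self, tag: str, artifact: object) -> None:
        if tag in self._versions:
            raise ValueError(f"tag already registered: {tag}")
        self._versions[tag] = artifact

    def promote(self, tag: str) -> None:
        if tag not in self._versions:
            raise KeyError(tag)
        self._history.append(tag)

    def rollback(self) -> str:
        """Drop the live version and return the tag that is live again."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def live(self):
        return self._history[-1] if self._history else None


registry = ModelRegistry()
registry.register("v1", "model-v1-artifact")
registry.register("v2", "model-v2-artifact")
registry.promote("v1")
registry.promote("v2")
restored = registry.rollback()  # v2 flopped, so v1 goes live again
```

Refusing to overwrite an existing tag is what keeps the history trustworthy: a tag always points at the exact build that was reviewed.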

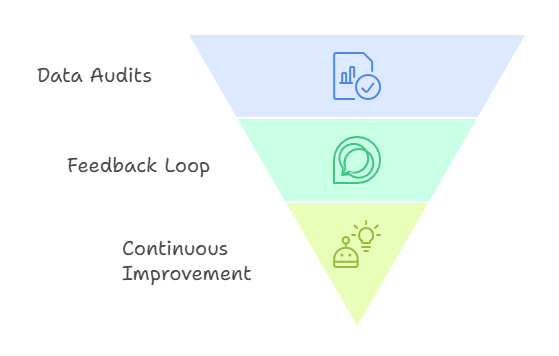

And don’t overlook the basics: run regular data “health checks.” Over time, new feeds creep in, column names change, or a downstream system starts sending odd formats. A quick audit every so often lets us spot those surprises early, clean things up, and keep the model’s fuel—our data—high-grade.
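A data health check can start as a few lines that compare incoming rows against the schema the model was trained on. The column names, the expected types, and the 5 percent null tolerance below are all hypothetical; the point is the shape of the audit, not the specific values.

```python
# Hypothetical schema the model expects: column name -> Python type.
EXPECTED_SCHEMA = {"customer_id": int, "order_total": float, "region": str}


def audit_rows(rows: list, schema: dict = EXPECTED_SCHEMA, max_null_rate: float = 0.05) -> list:
    """Return human-readable issues found in a batch of dict-shaped rows."""
    issues = []
    seen = set()
    for row in rows:
        seen.update(row)

    # Renamed or dropped columns show up as missing; new feeds as unexpected.
    for col in sorted(set(schema) - seen):
        issues.append(f"missing column: {col}")
    for col in sorted(seen - set(schema)):
        issues.append(f"unexpected column: {col}")

    for col, expected_type in schema.items():
        if col not in seen:
            continue
        values = [row.get(col) for row in rows]
        nulls = sum(v is None for v in values)
        if nulls / len(rows) > max_null_rate:
            issues.append(f"high null rate in {col}: {nulls}/{len(rows)}")
        # Odd formats, such as numbers arriving as strings, fail the type check.
        if any(v is not None and not isinstance(v, expected_type) for v in values):
            issues.append(f"wrong type in {col}: expected {expected_type.__name__}")
    return issues


batch = [
    {"customer_id": 1, "order_total": 9.5, "region": "EU"},
    {"customer_id": 2, "order_total": "12.50", "region": "EU", "channel": "web"},
]
problems = audit_rows(batch)
```

Running a check like this on every batch, and not only during the periodic audit, turns a silent format change into an explicit list someone can act on.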

Just as important, keep the feedback lines wide open. When a sales rep notices a weird recommendation or a customer flags an odd prediction, we treat that as pure gold. Those edge-case stories go straight back to the team running the model and shape the next sprint. The result? An AI system that learns not only from numbers, but from the collective experience of everyone who touches the product—which means it keeps getting smarter right alongside the business.

Building a Proactive MLOps Culture

Launching a great model is only half the battle. The real win is keeping that model sharp, trustworthy, and perfectly in tune with the business as it grows. That means ditching the “set-it-and-forget-it” mindset, watching for drift like a hawk, and wiring in real-time dashboards and automated retraining so problems get fixed before anyone feels the pain.

Regular check-ins (performance reviews, version tracking, data spot-checks, and a steady flow of feedback from the field) turn that model into a long-term asset rather than a one-off experiment. Put a focused MLOps team on point, and we can rest easy knowing any dip in accuracy is handled fast, long before it touches revenue or reputation.

Next Steps

- Sign Up for Our AI Monitoring Demo to see how real‑time dashboards, alerts, and retraining pipelines come together in a turnkey solution.

- Explore Our Maintenance Best Practices Webinar for a deep dive into performance review templates, versioning strategies, and data audit frameworks.

Don’t let your AI investment fade after launch. Proactive monitoring and systematic maintenance are the hallmarks of AI done right—so your models continue to deliver business value, day in and day out.
