Ethical & Transparent AI: Building a Responsible Framework

Learn why ethics and transparency are essential for AI systems, how to avoid reputational and legal pitfalls, and which best practices lead to trustworthy AI.

Introduction

Welcome, everyone! If you’re using or planning to deploy AI in your business, questions about fairness, privacy, and regulation are inevitable. In this post, we’ll explore:

- Why ethics matter in the world of AI.

- The real reputational and legal risks of ignoring ethical AI.

- Examples of what can go wrong when AI isn’t designed responsibly.

- Proven best practices for creating transparent, ethical, and trustworthy AI.

- How a culture of responsibility ties it all together.

Let’s dive in, because building an ethical AI framework now can save you from big headaches—and bigger expenses—down the road.

Reputational & Legal Risks

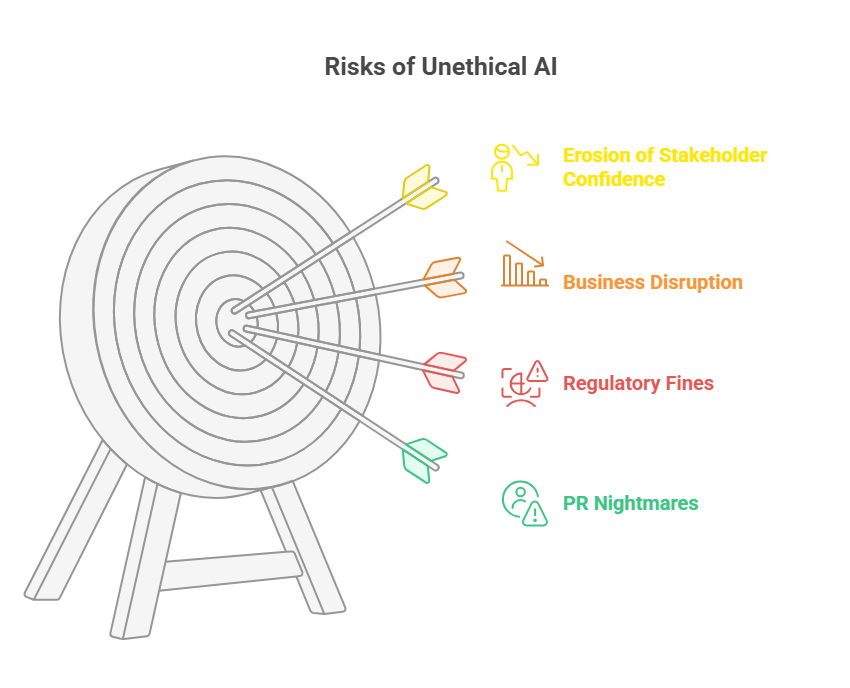

Imagine your AI inadvertently rejects qualified candidates from a particular demographic group. News spreads quickly, regulators open discrimination investigations, and suddenly you face fines or lawsuits. Even well-intentioned models can perpetuate biases hidden in training data, and the fallout can be severe:

- PR Nightmares: Viral headlines and social media backlash spread within hours, undermining customer and investor trust.

- Regulatory Fines: AI transparency and fairness laws are taking effect in a growing number of jurisdictions, with penalties that can reach millions of dollars.

- Business Disruption: Audit investigations, legal defenses, and remediation efforts drain engineering resources and stall product roadmaps.

- Erosion of Stakeholder Confidence: Once partners or clients doubt your AI’s integrity, winning them back becomes a long, uphill battle.

Examples of AI Ethics and Transparency Pitfalls

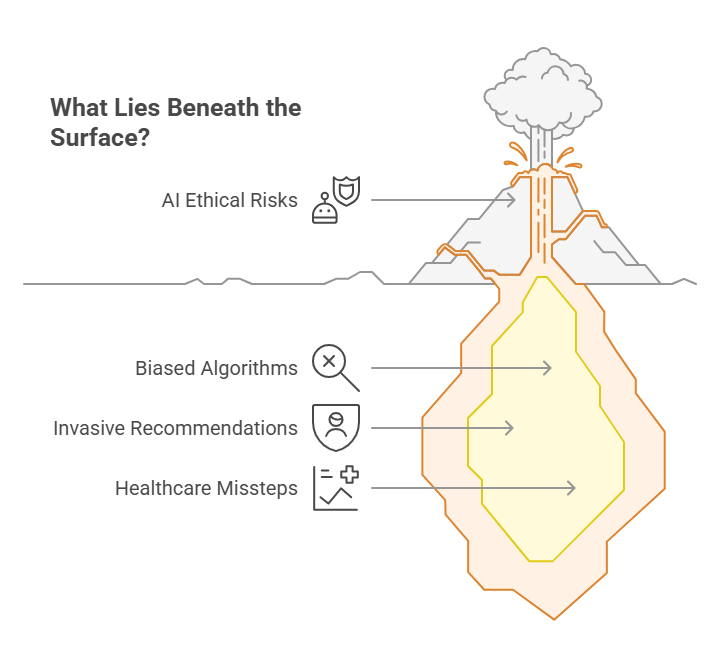

AI isn’t immune to mistakes—when it goes wrong, the consequences can be severe and far-reaching. Here are three all-too-real scenarios:

- Biased Hiring Algorithms: If your training data over-represents certain backgrounds or schools, an AI recruiting tool can systematically screen out equally qualified candidates from under-represented groups. This not only exposes you to discrimination claims but also deprives your organization of valuable talent and diversity.

- Invasive Recommendations: Over-personalized content—like surfacing a user’s health concerns or financial status—can feel unsettling and breach privacy expectations. When recommendation engines mine sensitive signals without consent, you risk alienating customers and triggering data-privacy investigations.

- Healthcare Missteps: In the medical field, a model that misreads patient records can deny critical treatments or suggest inappropriate interventions. Beyond the legal liability, such errors can cause real harm and permanently erode trust in your brand’s ability to safeguard well-being.

Each of these breakdowns chips away at both internal morale and external confidence. Ethical AI isn’t a marketing slogan—it’s a fundamental requirement for building systems people can trust.

Join Our Responsible AI Workshop

Ready for hands-on guidance? In our interactive workshop, we’ll review your AI initiatives, highlight risks, and craft a tailored action plan.

Best Practices for Responsible and Transparent AI

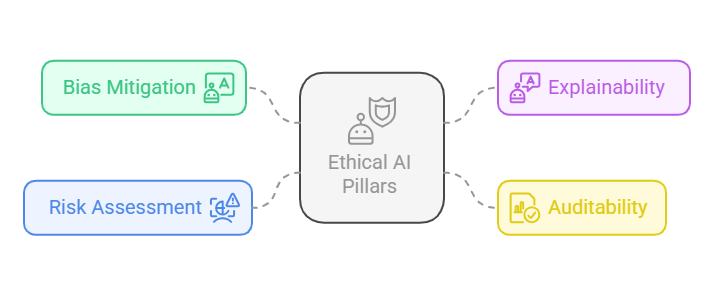

Building AI that users trust and regulators approve requires a proactive, structured approach. Focus on these four pillars to ensure your systems are fair, transparent, and robust:

- Bias Mitigation: Use diverse, representative datasets and run periodic disparity checks that compare outcomes across demographic groups. If you spot skewed outcomes, rebalance your data or adjust training methods. Involve domain experts to validate fairness goals, and incorporate stakeholder feedback into your mitigation strategy.

- Explainability: Favor interpretable models, or use post-hoc explanation tools such as LIME or SHAP to estimate which inputs most influenced each decision. Document these explanations alongside model versions and share them with non-technical stakeholders to foster transparency.

- Auditability: Keep a versioned log of data sources, preprocessing steps, model versions, and inference inputs and outputs for full traceability. Make sure this audit trail is easily accessible to both engineers and compliance teams for rapid incident response.

- Risk Assessment: Before launch, list potential failure modes, such as out-of-scope inputs or adversarial attacks, and put human review gates or alerts in place. Revisit and update this risk inventory regularly as new use cases, data sources, or threat vectors emerge.
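To make the Bias Mitigation pillar's periodic disparity check concrete, here is a minimal Python sketch that compares selection rates across groups. It assumes outcomes arrive as `(group, selected)` pairs; the function names `selection_rates` and `disparity_report` are hypothetical, and the default 0.8 threshold borrows the "four-fifths rule" heuristic from US employment-selection guidance. Treat a flag as a prompt to investigate, not as a legal finding.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected` is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparity_report(records, threshold=0.8):
    """Compare each group's selection rate with the highest-rate group.

    Groups whose ratio falls below `threshold` are flagged for review.
    The 0.8 default mirrors the "four-fifths rule" heuristic (an assumption here).
    """
    rates = selection_rates(records)
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        # If no group has any positive outcomes, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


if __name__ == "__main__":
    # Illustrative, made-up screening outcomes: (group, passed_screen)
    outcomes = (
        [("A", True)] * 40 + [("A", False)] * 60
        + [("B", True)] * 25 + [("B", False)] * 75
    )
    for group, row in sorted(disparity_report(outcomes).items()):
        print(f"{group}: rate={row['rate']:.2f} ratio={row['ratio']:.2f} flagged={row['flagged']}")
```

A flagged group tells you where to look; whether to rebalance data, adjust training, or revisit the screening criteria is a decision for the domain experts and stakeholders involved in your mitigation strategy.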
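For the Explainability pillar, the idea behind a per-decision explanation can be shown without external libraries on a linear scoring model: each feature's contribution is its weight times how far the input sits from a baseline, such as the training-set average. For linear models with independent features, this matches the Linear SHAP formulation; for non-linear models you would reach for LIME or SHAP themselves. The feature names, weights, and baseline values below are hypothetical.

```python
def explain_linear(weights, baseline, x, intercept=0.0):
    """Attribute a linear score to individual features.

    Each contribution is weight * (value - baseline value), so
    base_score + sum(contributions) == score.
    """
    contributions = {f: weights[f] * (x[f] - baseline[f]) for f in weights}
    base_score = intercept + sum(weights[f] * baseline[f] for f in weights)
    score = intercept + sum(weights[f] * x[f] for f in weights)
    # Largest absolute effect first, so reports lead with what mattered most.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, base_score, ranked


def decision_report(score, base_score, ranked):
    """Render an explanation as plain text for non-technical readers."""
    lines = [f"Score {score:.2f} (baseline: {base_score:.2f})"]
    for feature, effect in ranked:
        direction = "raised" if effect >= 0 else "lowered"
        lines.append(f"- {feature} {direction} the score by {abs(effect):.2f}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical model, baseline (training averages), and applicant.
    weights = {"years_experience": 0.5, "skills_match": 2.0, "referral": 1.0}
    baseline = {"years_experience": 4.0, "skills_match": 0.5, "referral": 0.2}
    applicant = {"years_experience": 6.0, "skills_match": 0.9, "referral": 0.0}
    print(decision_report(*explain_linear(weights, baseline, applicant)))
```

Storing the text from `decision_report` next to the model version that produced it gives non-technical reviewers an explanation they can read without running any code.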
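The Auditability pillar's versioned log can start as one structured record per inference. The sketch below is one possible design rather than a prescribed schema: the class and field names are hypothetical, and chaining each entry to the previous one with a SHA-256 hash is an added assumption that makes silent edits detectable. In production you would persist entries to durable, access-controlled storage instead of keeping them in memory.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """SHA-256 over a canonical JSON encoding (sorted keys, no extra spaces)."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained audit trail (in memory, for illustration)."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, data_sources, preprocessing, inputs, output):
        """Append one inference record; inputs and output must be JSON-serializable."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "data_sources": list(data_sources),
            "preprocessing": list(preprocessing),
            # JSON round-trip copies the payload so later caller edits can't alter it.
            "inputs": json.loads(json.dumps(inputs)),
            "output": json.loads(json.dumps(output)),
            "prev_hash": prev_hash,
        }
        entry["hash"] = _digest(entry)  # hash covers every field except itself
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry was modified, removed, or reordered."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def to_jsonl(self):
        """Export one JSON object per line for compliance tooling."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.entries)


if __name__ == "__main__":
    log = AuditLog()
    log.record(
        model_version="screening-model v1.3",          # hypothetical
        data_sources=["applicants_2024.csv"],          # hypothetical
        preprocessing=["drop_nulls", "normalize_scores"],
        inputs={"applicant_id": "example-001"},
        output={"decision": "advance"},
    )
    print(log.verify())
```

Exporting with `to_jsonl()` gives compliance teams a line-per-decision file they can search without touching the model code.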
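For the Risk Assessment pillar, a human review gate can be expressed as a small routing rule. This sketch assumes two failure modes you might list before launch: inputs outside the ranges seen in training, and low model confidence. The class name, feature ranges, and 0.8 threshold are hypothetical placeholders to be set from your own risk inventory, and a range check like this does not by itself defend against adversarial attacks.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewGate:
    """Route a prediction either to automation or to human review.

    feature_ranges: (min, max) observed for each feature in training data.
    min_confidence: predictions below this confidence go to a human.
    """
    feature_ranges: dict
    min_confidence: float = 0.8
    alerts: list = field(default_factory=list)

    def route(self, features, confidence):
        reasons = []
        for name, (low, high) in self.feature_ranges.items():
            value = features.get(name)
            if value is None:
                reasons.append(f"missing feature '{name}'")
            elif not (low <= value <= high):
                reasons.append(f"'{name}'={value} outside training range [{low}, {high}]")
        if confidence < self.min_confidence:
            reasons.append(f"confidence {confidence:.2f} below {self.min_confidence:.2f}")
        if reasons:
            # In a real system these alerts would feed a monitoring or paging tool.
            self.alerts.append(reasons)
            return "human_review", reasons
        return "auto", []


if __name__ == "__main__":
    # Hypothetical clinical features and ranges, for illustration only.
    gate = ReviewGate(feature_ranges={"heart_rate": (30, 220), "systolic_bp": (70, 250)})
    print(gate.route({"heart_rate": 72, "systolic_bp": 120}, confidence=0.93))
    print(gate.route({"heart_rate": 400, "systolic_bp": 120}, confidence=0.93))
```

Keeping the reasons alongside each routing decision means reviewers see why a case was escalated, and the `alerts` list doubles as raw material when you revisit the risk inventory.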

Cultivating a Culture of Responsibility

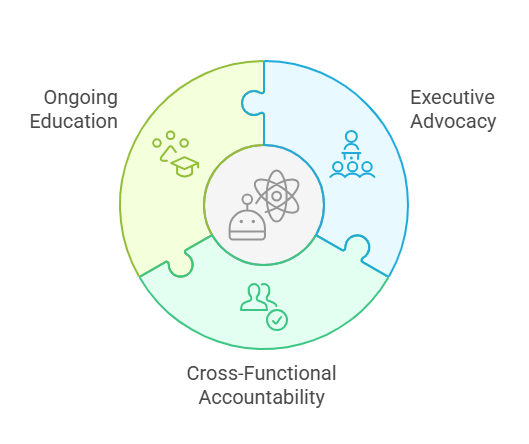

Tools and policies alone won’t suffice unless your entire organization shares a vision of ethical AI. A “culture of responsibility” requires:

- Executive Advocacy: Leadership champions transparency and fairness as core values. They also allocate resources, set measurable ethics goals, and hold teams accountable for AI outcomes.

- Cross-Functional Accountability: Developers, data scientists, compliance, and legal teams collaborate on AI governance. Regular cross-team reviews and shared KPIs ensure ethical considerations are integrated at every project stage.

- Ongoing Education: Regular training sessions, hackathons, and “AI ethics demos” keep awareness high. Curated learning paths, guest speakers, and hands-on labs help employees stay current on emerging ethical challenges.

Conclusion & Next Steps

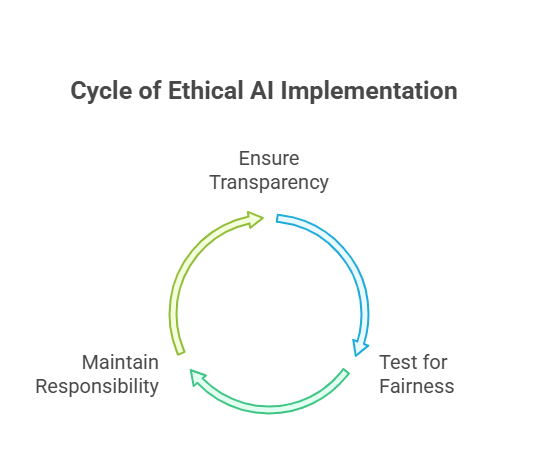

Ethical and transparent AI is a competitive differentiator—and a fundamental business requirement. By embedding fairness, explainability, and accountability into every phase—from data collection to model deployment—you build systems that users trust and regulators approve.

Bonus Resources

- Ethical AI Policy Template: A free, concise guide covering transparency, fairness, and accountability.

- Responsible AI Workshop: Interactive session to review your AI projects and craft a tailored action plan.

Key Action Items Checklist

- Identify Potential Biases: Regularly audit datasets and outcomes.

- Embrace Explainability: Use interpretable models or generate clear decision reports.

- Maintain Audit Trails: Document data sources, training steps, and updates.

- Conduct Risk Assessments: Proactively map ethical and compliance risks for each AI initiative.

- Foster Ownership: Communicate ethical guidelines and governance processes across teams.

Ready to make your AI both powerful and principled?

Download our free Ethical AI Policy Template and book your complimentary Responsible AI Workshop today.